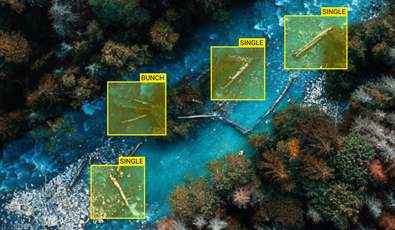

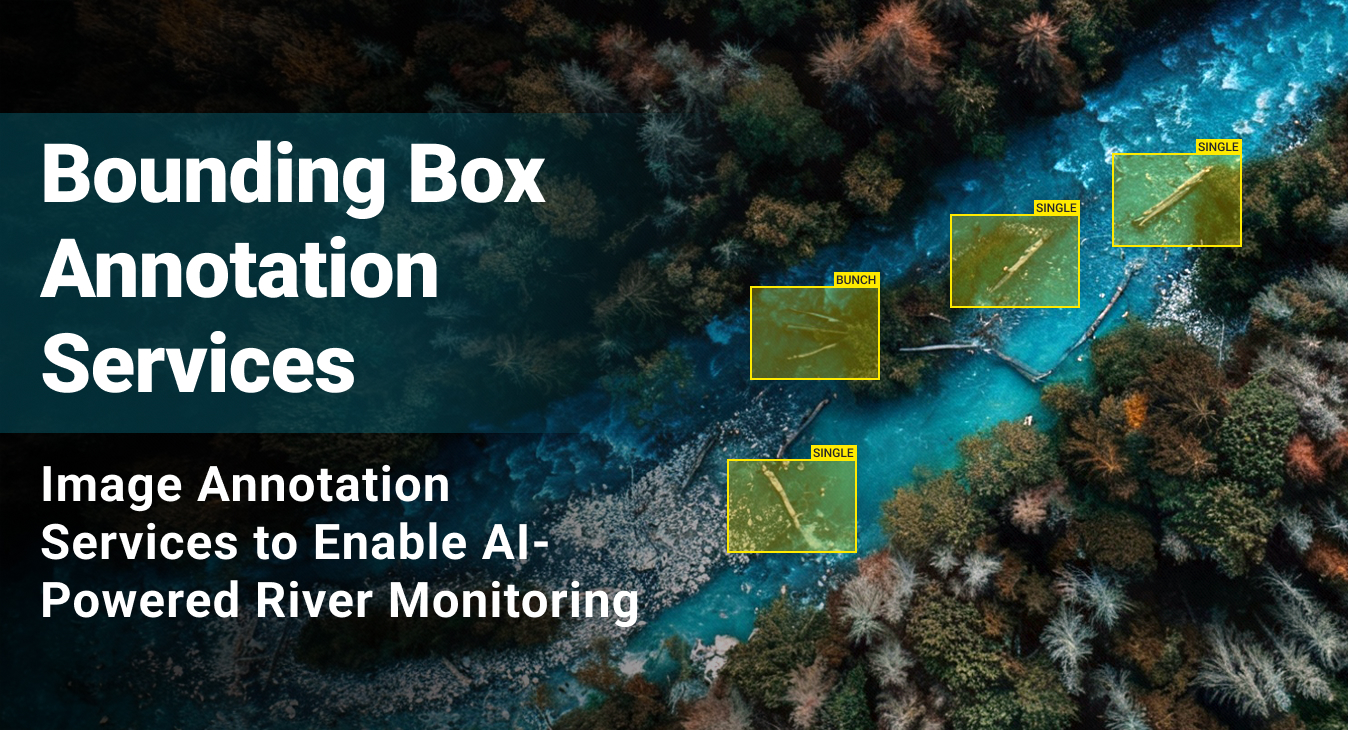

Precise bounding box annotation for high-resolution aerial river images to train an AI-powered river flow obstruction detection system using the client’s proprietary data annotation tool.

- 1,500 to 2,000 Images Labeled per Week

- 98% Labeling Accuracy Rate Maintained

- <1% Revision/Rework Rate

- Service: Image Annotation

- Platform: Client’s Proprietary Annotation Platform

- Industry: Environmental Monitoring / Forestry

15,000+

Images Annotated95%+

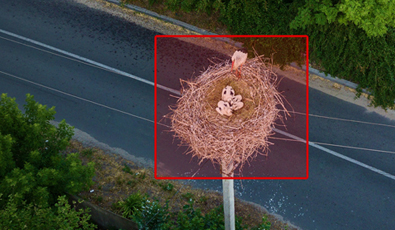

Annotation Accuracy- Service Image Annotation Services

- Platform Client’s Proprietary Annotation Platform

- Industry Wildlife Conservation / Energy

- 35% Increase in Model Accuracy

- 20% Improvement in Traffic Flow Monitoring

- Service: Image Annotation, Bounding Box Annotation, Data Classification

- Platform: CVAT

- Industry: Urban Planning and Development

- 30% Boost in Object Detection Accuracy

- 20% Increase in Overall Operational Efficiency

- Expanded Drone Tracking Capabilities

- Service: Video Annotation Services, Infrared & Thermal Imaging Processing, Bounding Box Annotation

- Platform: CVAT

- Industry: Security and Surveillance

- 65% Improved AI Model Accuracy

- 60% Fewer Content Categorization Errors

- 4-Month Faster Model Development

- Service: Data Labeling, Text Labeling, Video Labeling, Web Research

- Platform: Client's Predictive Content Intelligence Platform

- Industry: Media and Entertainment