Operational efficiency and automation are no longer nice-to-haves but survival necessities for businesses. Organizations have long relied on DevOps to streamline development and IT operations and meet their efficiency benchmarks. Today, however, as organizations transform with AI/ML at their core, this practice must extend beyond traditional software development lifecycles.

The complexity of AI/ML, including working with multiple datasets, automating ML model training, tracking versions, and maintaining performance as circumstances change, has not been adequately addressed by traditional DevOps best practices.

A DevOps team can deploy applications routinely, yet data science teams often struggle to move a single model from prototype to production using traditional DevOps methods. The AI/MLOps (Artificial Intelligence/Machine Learning Operations) framework addresses this gap. Think of it as DevOps evolved for the AI/ML era: it extends your existing automation to cover the entire ML lifecycle, from data engineering to production monitoring.

Where many organizations go wrong, however, is treating MLOps and DevOps as competing methodologies when they are actually complementary. Read on to understand where they overlap, where they diverge, and how to implement each strategically based on your current AI maturity level.

DevOps vs. MLOps: An Overview

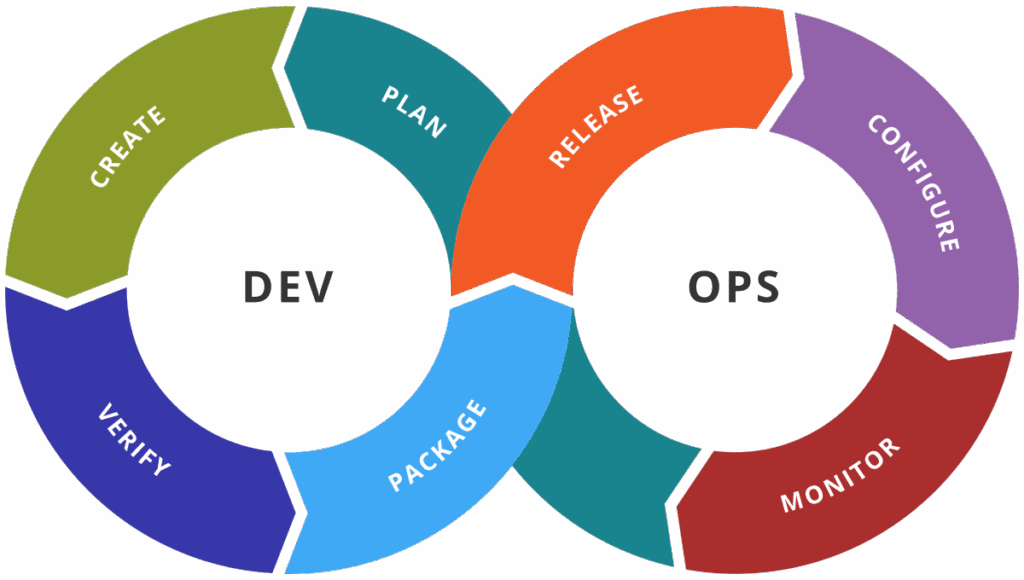

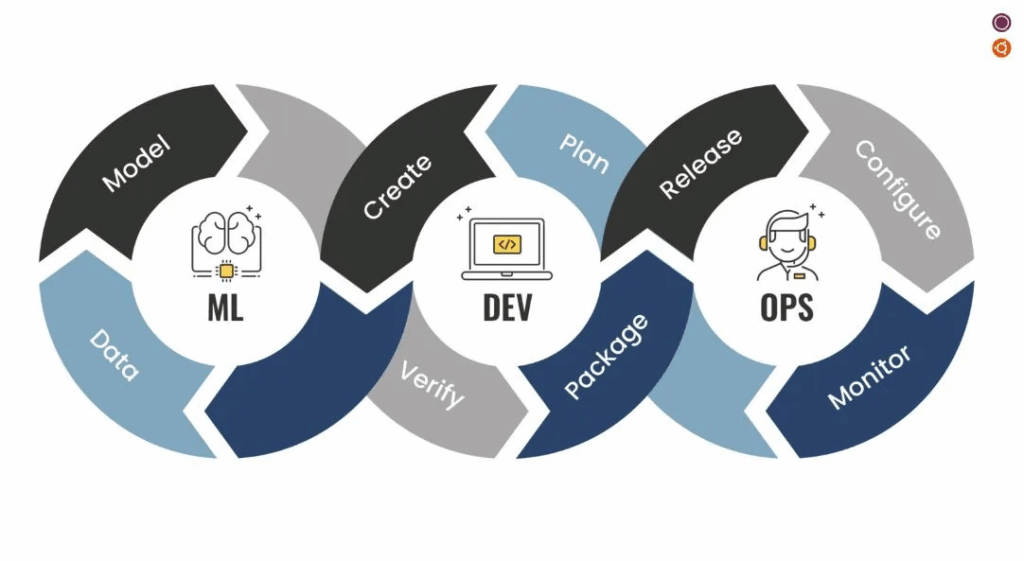

On a surface level, here is what a DevOps and MLOps cycle would look like –

What is DevOps?

Think of DevOps as turning your software development process from a step-by-step relay race into a synchronized swimming team. Instead of developers handing code over to operations in one batch with little communication, DevOps builds cohesive teams in which development and operations work in sync.

The Result: Software releases transform from risky weekend events into routine deployments that happen multiple times per day.

What is MLOps?

Unlike conventional software, ML models behave more like complex machinery: their outputs need continuous monitoring for performance drift, regular recalibration with fresh data, and systematic maintenance as conditions change. MLOps is the guiding framework, in other words the ‘control room’, that enables ML engineers to do all of this.

The Result: AI/ML models run reliably in production and generate predictable outcomes.

Going Further: DevOps vs. MLOps

Fundamentally, MLOps and DevOps share the same core principles.

What is Common Between MLOps and DevOps?

First, both follow the same automation philosophy: automate processes to reduce dependence on labor-intensive, error-prone manual operations. Second, both center on a collaborative work culture that dismantles silos.

Third, both emphasize continuous monitoring for problem-solving and performance optimization. Finally, the fundamental CI/CD principles of continuous integration and delivery, along with supporting tools such as Docker containers, apply whether the workflow is a software application or an ML pipeline.

What is the Key Difference Between MLOps and DevOps?

MLOps is not a replacement for DevOps but an evolution that builds on its principles and best practices. While DevOps optimizes software development lifecycles, MLOps adapts these practices to address the bottlenecks in ML and data engineering pipelines. The differences lie in areas of focus, tools, and team structures.

Let’s look at where the two diverge.

MLOps vs. DevOps: Focus Area and Workflows

A primary difference between MLOps and DevOps lies in their focus. DevOps, by definition, revolves around core software development and IT operations, unifying dev and ops teams across all stages of the process.

MLOps frameworks, by contrast, are designed around ML workflows. They automate the core machine learning steps: data integration and processing, model selection and training, model integration, and deployment.

MLOps vs. DevOps: Core Tasks

Typically, DevOps best practices focus on:

- Code version control and automated testing

- Infrastructure provisioning and configuration management

- Application deployment and rollback procedures

- System monitoring and incident response

MLOps best practices extend these fundamentals to include:

- Dataset versioning and data quality validation

- Automated model training and hyperparameter optimization

- Model performance monitoring and drift detection

- Continuous model retraining and A/B testing of model versions
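Drift detection, listed above, is one task that standard DevOps monitoring does not cover. The sketch below is a minimal illustration, assuming a single numeric feature and using the Population Stability Index (PSI), one common drift metric among several. The bin count and the 0.2 alert threshold are widely used rules of thumb, not fixed standards, and the sample data is synthetic.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample.

    Bin edges come from the baseline (training-time) sample's range.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero-width bins for constant data

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the first or last bin
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(1000)]  # synthetic training-time feature values
live = [i / 100 + 3 for i in range(1000)]  # synthetic live values shifted upward by 3
score = psi(baseline, live)
if score > 0.2:  # rule-of-thumb threshold for significant drift
    print(f"PSI={score:.2f}: drift detected, consider retraining")
```

In a real pipeline, a score above the threshold would typically trigger an alert or a retraining job rather than a print statement.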

MLOps vs. DevOps: Team Structures

Another key distinction between MLOps and DevOps lies in team composition. In DevOps, collaboration happens mainly between software developers and operations teams, who optimize the delivery pipeline against agreed-upon requirements and SLA benchmarks.

In MLOps, the process is more involved because data scientists, ML engineers, and operations teams all need to participate. Their involvement is essential because AI/ML-generated outputs must be thoroughly monitored and optimized in production environments.

MLOps vs. DevOps: Tools & Technologies

In a DevOps framework, teams often rely on the following tools:

- Jenkins: For automating builds, tests, and deployments in CI/CD pipelines.

- Docker: For containerizing applications so they run consistently across development, testing, and production.

- Kubernetes: For orchestrating and managing containerized applications.

- Terraform: For managing infrastructure as code on cloud platforms such as AWS, Azure, and Google Cloud.

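In an MLOps framework, widely used tools include:

- TensorFlow Extended (TFX): For building and deploying end-to-end production ML pipelines.

- Apache Airflow: For orchestrating and scheduling data and ML pipelines.

- H2O.ai: For automated machine learning (AutoML) and model building.

- MLflow: For experiment tracking, model packaging, and model registry management.

Many organizations also use AWS MLOps services and other cloud-managed services to automate their ML workflows.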

MLOps vs. DevOps: Outcome Goals

The two approaches also pursue different outcomes. Through DevOps automation, teams seek faster software delivery, operational stability, and easier scaling. With an MLOps framework in place, the objective shifts to the smooth development, training, and deployment of ML models in production, ensuring that they perform consistently as new data arrives and the environment changes over time.

MLOps vs. DevOps: Challenges

Both MLOps and DevOps teams face distinct implementation challenges, such as:

Data Quality

In DevOps, the primary focus remains on application code, whose behavior stays largely fixed once deployed. In an MLOps framework, however, a model’s output depends on the quality of the data used to train it. Ensuring data integrity and consistency throughout the ML lifecycle is therefore a continuous challenge.
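A common response is an automated data quality gate that runs before every training job. The sketch below is a minimal illustration only: the `SCHEMA` contents, column names, and the `validate_batch` helper are hypothetical, and production teams often rely on dedicated tooling (for example, TensorFlow Data Validation within TFX) for the same purpose.

```python
from typing import Any

# Hypothetical schema: column -> (accepted types, minimum, maximum)
SCHEMA = {
    "age": ((int,), 0, 120),
    "income": ((int, float), 0.0, 10_000_000.0),
}


def validate_batch(rows: list[dict[str, Any]],
                   max_bad_fraction: float = 0.01) -> tuple[bool, list[str]]:
    """Check a training batch against SCHEMA.

    Returns (passed, problems). The batch fails if it is empty or if the
    fraction of rows with at least one problem exceeds max_bad_fraction.
    """
    if not rows:
        return False, ["empty batch"]
    problems: list[str] = []
    bad_rows = 0
    for i, row in enumerate(rows):
        row_problems = []
        for col, (types, lo, hi) in SCHEMA.items():
            value = row.get(col)
            if value is None:
                row_problems.append(f"row {i}: missing '{col}'")
            elif not isinstance(value, types):
                row_problems.append(f"row {i}: '{col}' has type {type(value).__name__}")
            elif not lo <= value <= hi:
                row_problems.append(f"row {i}: '{col}'={value} outside [{lo}, {hi}]")
        if row_problems:
            bad_rows += 1
            problems.extend(row_problems)
    passed = bad_rows / len(rows) <= max_bad_fraction
    return passed, problems


# Example: one of two rows has an impossible age, so a zero-tolerance gate fails
batch = [{"age": 34, "income": 52000.0}, {"age": -5, "income": 41000.0}]
passed, problems = validate_batch(batch, max_bad_fraction=0.0)
```

Failing the whole batch only above a tolerance (`max_bad_fraction`) is one design choice among several; stricter pipelines reject any invalid row.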

Versioning

Versioning is a harder problem in MLOps workflows. Beyond code, teams must track versions of datasets, models, and training runs, which becomes tedious and complex when dealing with data at scale.
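One lightweight way to make dataset and training-run versions traceable is to fingerprint them by content. This is a minimal sketch, assuming datasets fit in memory as bytes and hyperparameters are JSON-serializable; the `dataset_version` and `run_fingerprint` helpers and the commit string are hypothetical. Dedicated tools such as DVC (for data) and MLflow (for experiments and models) provide fuller tracking.

```python
import hashlib
import json


def dataset_version(data: bytes) -> str:
    """Content hash: identical bytes always get the same version ID."""
    return hashlib.sha256(data).hexdigest()[:12]


def run_fingerprint(code_commit: str, data: bytes, hyperparams: dict) -> str:
    """Tie one training run to the exact code, data, and hyperparameters used."""
    record = {
        "code": code_commit,
        "data": dataset_version(data),
        "params": hyperparams,
    }
    # sort_keys makes the JSON, and therefore the hash, independent of dict order
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


csv_v1 = b"age,income\n34,52000\n"
csv_v2 = b"age,income\n34,52000\n29,61000\n"
run_a = run_fingerprint("abc123", csv_v1, {"lr": 0.01, "epochs": 5})  # hypothetical commit
run_b = run_fingerprint("abc123", csv_v1, {"epochs": 5, "lr": 0.01})  # same inputs, reordered
run_c = run_fingerprint("abc123", csv_v2, {"lr": 0.01, "epochs": 5})  # data changed
```

Here `run_a` equals `run_b` because only the key order differs, while `run_c` differs because the dataset changed, which is exactly the distinction a versioning system needs to preserve.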

Collaboration

DevOps promotes collaboration between development and operations teams, helping avoid common bottlenecks such as communication delays. MLOps, however, involves stakeholders beyond the typical dev and ops teams, including data scientists, ML engineers, and business teams. Aligning all of them is difficult without project management tools (such as Jira or Trello), shared version control (such as Git), regular stand-ups, and a tailored MLOps strategy.

Compatibility

DevOps tools have been around for years and are widespread and readily available. Platforms like Jenkins and Docker integrate easily with a wide range of tech stacks and have become staples of today’s AI-integrated development landscape.

Conversely, MLOps tools such as TensorFlow Extended and Kubeflow are relatively new and designed primarily for modern tech stacks and architectures, which limits their utility in legacy software development environments.

Scalability & Infrastructure Requirements

DevOps focuses mainly on scaling software applications and managing infrastructure, but the demands of MLOps go further. ML workflows require extensive computational resources, especially for large training datasets, so scalable cloud environments, GPUs, and other specialized hardware are often necessary.

MLOps vs DevOps: Key Differences Summary

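| Aspect | DevOps | MLOps |
|---|---|---|
| Primary Focus | Software development and IT operations | Machine learning workflows and AI model lifecycle |
| Core Workflow | Code → Build → Test → Deploy → Monitor | Data → Model → Train → Validate → Deploy → Monitor → Retrain |
| Team Composition | Software developers + Operations teams | Data scientists + ML engineers + Operations teams + Business stakeholders |
| Key Tasks | You hire DevOps engineers for code version control and automated testing, infrastructure provisioning and configuration management, application deployment and rollback, and system monitoring and incident response | You hire MLOps engineers for dataset versioning and data quality validation, automated model training and hyperparameter optimization, model performance monitoring and drift detection, and continuous retraining and A/B testing of model versions |
| Primary Tools | Jenkins, Docker, Kubernetes, Terraform | TensorFlow Extended (TFX), Apache Airflow, H2O.ai, MLflow, Kubeflow, and cloud-managed services such as AWS MLOps services |
| Outcome Goals | Faster software delivery, operational stability, and easier scaling | Smooth development, training, and deployment of ML models that perform consistently as data and environments change |
| Data Handling | Static application code | Dynamic, ever-changing datasets requiring continuous validation |
| Versioning Complexity | Code and configuration files | Code + datasets + models + hyperparameters + experiments |
| Performance Monitoring | Application uptime and system metrics | Model accuracy + data drift + performance degradation |
| Infrastructure Needs | Standard compute resources | High-performance GPUs, extensive computational resources, and cloud environments |
| Collaboration Scope | Development ↔ Operations | Development ↔ Data Science ↔ Operations ↔ Business Teams |
| Tool Maturity | Established, widely compatible tools with an extensive ecosystem | Newer tools with limited legacy system compatibility |
| Primary Challenges | Aligning development and operations teams; scaling applications and managing infrastructure | Data quality, versioning across data and models, multi-stakeholder collaboration, legacy compatibility, and compute-intensive infrastructure |
| Scalability Focus | Application scaling and infrastructure management | Computational resource scaling for training + inference workloads |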

Which Framework Suits Your Existing Workflows, DevOps or MLOps?

Choosing between DevOps and MLOps calls for strategic thinking, because it isn’t a binary, this-or-that decision. It depends on several high-impact factors: your current AI/ML maturity (whether you’ve adopted AI/ML deeply or have yet to redesign your processes around it), financial scope, business objectives, and overall organizational readiness.

Here is how to work through the MLOps vs. DevOps decision.

Organizational AI Maturity Assessment

→ If your organization still focuses on traditional software development with minimal AI integration, investing in DevOps best practices will suffice.

→ If you’re running pilot ML projects, with data science teams creating models that rarely reach production, establish DevOps excellence first and then layer in selective MLOps capabilities.

→ If you have redesigned your processes with AI/ML and have started gaining value, MLOps frameworks are no longer optional, but critical.

Alignment with Business Objectives

→ DevOps best practices deliver immediate ROI if your primary goal is maintaining operational stability and faster feature delivery for existing products.

→ When you plan to integrate AI capabilities or when AI capabilities differentiate your products or create new revenue streams, you should systematically invest in some of the top MLOps tools or services.

→ In high-stakes industries like finance, aviation, and healthcare, extensive model governance and auditability are non-negotiable. MLOps frameworks are the more advisable approach here, because MLOps best practices provide the necessary tracking, versioning, and compliance capabilities.

Organizational State

For DevOps-Mature Organizations

Your existing automation, collaboration culture, and technical infrastructure can give you a head start of roughly 6–12 months in implementing MLOps frameworks. With this DevOps pipeline foundation in place, extending CI/CD to ML workflows becomes much easier.
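Extending CI/CD to ML often means adding a model-quality gate alongside the usual tests: a pipeline step that blocks deployment when a newly trained model underperforms the one in production. This is a minimal sketch; the metric names, thresholds, and sample values are hypothetical, and it assumes the common CI convention that a non-zero exit code fails the stage (as in Jenkins shell steps).

```python
def should_promote(candidate: dict[str, float], production: dict[str, float],
                   min_gain: float = 0.0, max_latency_ms: float = 100.0) -> tuple[bool, str]:
    """Decide whether a candidate model may replace the production model."""
    if candidate["accuracy"] < production["accuracy"] + min_gain:
        return False, (f"accuracy {candidate['accuracy']:.3f} does not beat "
                       f"production {production['accuracy']:.3f} by {min_gain}")
    if candidate["p95_latency_ms"] > max_latency_ms:
        return False, (f"p95 latency {candidate['p95_latency_ms']} ms "
                       f"exceeds {max_latency_ms} ms")
    return True, "candidate passes all gates"


def main() -> int:
    """Return a CI exit code: 0 allows deployment, 1 blocks it."""
    # Hypothetical metrics; a real pipeline would load these from its evaluation step
    candidate = {"accuracy": 0.912, "p95_latency_ms": 48.0}
    production = {"accuracy": 0.905, "p95_latency_ms": 52.0}
    ok, reason = should_promote(candidate, production, min_gain=0.005)
    print(reason)
    return 0 if ok else 1
```

In a CI job, the script would end with `sys.exit(main())` so that a failed gate stops the stage before the model is deployed.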

For DevOps-Emerging Organizations

Trying to implement MLOps frameworks without first mastering DevOps best practices often fails. Invest 6–9 months in strengthening your development and operations practices before expanding into MLOps.

DevOps vs. MLOps: Decision Matrix

| Decision Factor | When to Choose DevOps | When to Choose MLOps |
|---|---|---|
| AI/ML Maturity | Traditional software development with minimal AI integration. | AI/ML is already integrated into processes and delivering business value. |
| Pilot ML Projects | Data science teams build models that rarely reach production; focus first on DevOps excellence. | After achieving DevOps maturity, layer in MLOps to streamline ML model deployment and monitoring. |
| Business Objectives | Prioritize operational stability, faster feature delivery, and continuous software improvement. | AI capabilities drive differentiation or revenue generation, or are integral to product innovation. |
| Industry Context | Standard software environments with low compliance demands. | High-stakes industries (finance, aviation, healthcare) that require model governance, auditability, and compliance. |
| Organizational Readiness | Teams and workflows are still developing in terms of automation, collaboration, and CI/CD maturity. | DevOps-mature organizations ready to extend CI/CD pipelines to ML workflows (roughly a 6–12 month implementation head start). |
| Implementation Roadmap | Strengthen development and operations practices for 6–9 months before expanding. | Build on existing DevOps maturity to integrate data versioning, model tracking, and monitoring under MLOps. |

The Way Forward

True autonomy and efficiency lie not in choosing between implementing an MLOps framework and sticking to traditional DevOps best practices, but in making sequential investments in both. Based on your current organizational state and business priorities, you should aim to create integrated strategies in which DevOps establishes the operational foundation that enables faster, cheaper, and more reliable implementation of MLOps frameworks.

At SunTec India, we recognize how crucial this decision is and acknowledge its impact on your organization. This is why we work closely with seasoned DevOps and MLOps consultants who can assess your workflows and infrastructure readiness to determine the ideal approach. Share your requirements at info@suntecindia.com for a no-obligation consultation.