My team and I often work on off-page marketing for our clients. Because many of these companies are leaders in their respective circles, the quality of guest blogging we do on their behalf has to be, by default, stellar. Over the last year, we have been facing a single, persistent roadblock on that path.

"We are sorry to inform you that the content submitted to our website as a guest post can not be published because we have found the content to be AI-generated."

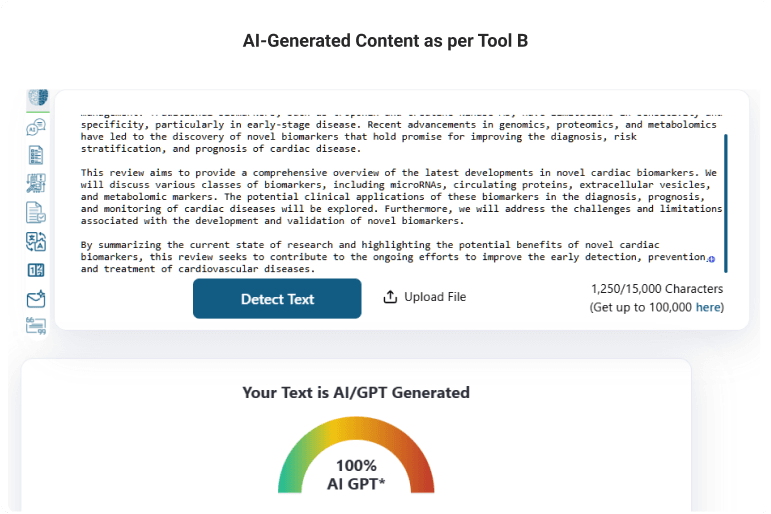

Most website owners consider AI-generated content a problem. I don't blame them because, well, Google! But then again, they check for it with AI content detectors, whose results may not be dependable or trustworthy. That is paradoxical.

This situation highlights a growing tension in the digital landscape: the increasing reliance on AI and the simultaneous mistrust of it. As marketers, we find ourselves at the crossroads of this paradox, forced to navigate the challenges posed by AI content detection tools, which, while intended to preserve content integrity, often fall short of understanding the nuances of human-like text generated by advanced algorithms. Ironically, the very tools designed to protect against AI abuse are becoming barriers to legitimate content dissemination.

The Reliability of AI Detection Tools Is a Hotly Debated Topic

This is true not just among marketers but also among educators, content creators, researchers, and those actively involved in the evolution of AI technology.

A recent paper from Stanford scholars puts it like this: GPT detectors are biased against non-native English writers and may unintentionally penalize writers with constrained linguistic expressions.

Take OpenAI's press release of January 31, 2023, which acknowledged a significant limitation: "Our classifier is not fully reliable. In our evaluations on a 'challenge set' of English texts, our classifier correctly identifies 26% of AI-written text (true positives) as 'likely AI-written,' while incorrectly labeling human-written text as AI-written 9% of the time (false positives)." This candid admission was followed by another important piece of information: "Our classifier has a number of important limitations. It should not be used as a primary decision-making tool, but instead as a complement to other methods of determining the source of a piece of text."
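To see what those two figures mean for someone on the receiving end of a flag, here is a quick back-of-the-envelope calculation using Bayes' rule. The 26% and 9% come from the OpenAI quote; the share of submissions that are actually AI-written (`ai_share`) is purely an assumption for illustration, since OpenAI published no such number, and the function name is hypothetical.

```python
def flag_precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Probability that a flagged text really is AI-written (Bayes' rule)."""
    flagged_ai = tpr * ai_share              # AI-written texts that get flagged
    flagged_human = fpr * (1.0 - ai_share)   # human-written texts that get flagged
    return flagged_ai / (flagged_ai + flagged_human)


TPR = 0.26  # true positive rate quoted by OpenAI
FPR = 0.09  # false positive rate quoted by OpenAI

# ai_share values are assumed for illustration only.
for ai_share in (0.10, 0.50):
    precision = flag_precision(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: {precision:.0%} of flagged texts are AI-written")
```

Under the assumption that 1 in 10 submissions is AI-written, only about 24% of flagged texts would actually be AI-written; the rest would be human work. Even at an assumed 50/50 split, roughly a quarter of all flags would land on human writers.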

Also, check out the AI writing detection update that Turnitin released on May 23, 2023. It quotes Annie Chechitelli, Turnitin's Chief Product Officer: "We might flag a human-written document as having AI writing for one out of every 100 human-written documents. When a sentence is highlighted as AI-written, there is ~4% chance that the sentence is human-written."

These inaccuracies go well beyond technical glitches; they have real-world consequences, like harming academic reputations and weakening trust in research. But I digress.

Those statements were made almost a year and a half ago. Since then, OpenAI has yet to release a new AI classifier capable of reliably indicating AI-written content. The absence of an updated tool suggests that the challenges inherent in AI detection may be more complex than initially anticipated. Even Turnitin, in its July 2024 release note, states that, to reduce the incidence of false positives, it attributes no score or highlights when the AI detection score falls in the 1% to 19% range.

All of this raises important questions about the current state of AI-generated content detection technology: if the leading organizations in AI development have not yet found a solution, what does that mean for the rest of us who rely on these tools?

The Mechanics of AI Detection Tools (That We Know Of)

AI detection tools are supposed to be like lie detectors for text. They analyze writing style, grammar, vocabulary, and even the rhythm of sentences to spot the subtle differences between human and AI-generated content.

These tools have been rumored to employ various techniques for AI content detection, including:

- Linguistic Analysis: Examining syntax, grammar, and style to identify patterns that may differ from human writing

- Statistical Methods: Using probabilistic models to detect anomalies in word usage and sentence structure

- Neural Networks: Employing deep learning models trained on vast datasets to differentiate between human and AI text
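To make the "statistical methods" idea a little more concrete, here is a toy sketch that computes two simple signals: how much sentence length varies, and how varied the vocabulary is (type-token ratio). This is a minimal illustration under my own assumptions, not the method used by any tool named in this article; the function name `style_signals` and the crude regex-based splitting are hypothetical choices.

```python
import re
import statistics


def style_signals(text: str) -> dict:
    """Compute a few crude stylometric signals for a piece of text."""
    # Naive sentence split on ., ! or ? (an assumption; real tools are far more careful).
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    # Lowercased word tokens, keeping apostrophes so "don't" stays one word.
    words = re.findall(r"[a-z']+", text.lower())
    return {
        "sentences": len(sentences),
        "mean_sentence_len": statistics.mean(lengths) if lengths else 0.0,
        # Population standard deviation: 0.0 means every sentence has the same length.
        "sentence_len_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Unique words divided by total words.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


sample = "Hi. This is a much longer sentence here."
print(style_signals(sample))
```

A heuristic detector built on signals like these would presumably treat very uniform sentences and a narrow vocabulary as "machine-like." That also hints at why writers with a constrained style, such as the non-native English writers in the Stanford paper, could be penalized by this kind of approach.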

On Paper, This Works Too Well. In Reality, Not So Much!

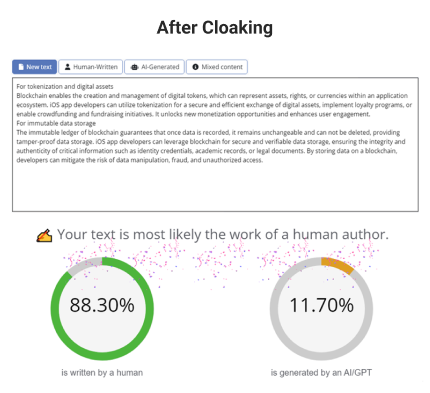

AI detection tools often produce inconsistent results. For instance, a piece of content might be flagged as human-written in one instance and AI-generated in another. This inconsistency undermines the credibility of these tools.

Here are a couple of examples from my experiment that show just how much the results of AI-generated content detection tools like Copyleaks, ZeroGPT, and GPTZero vary.

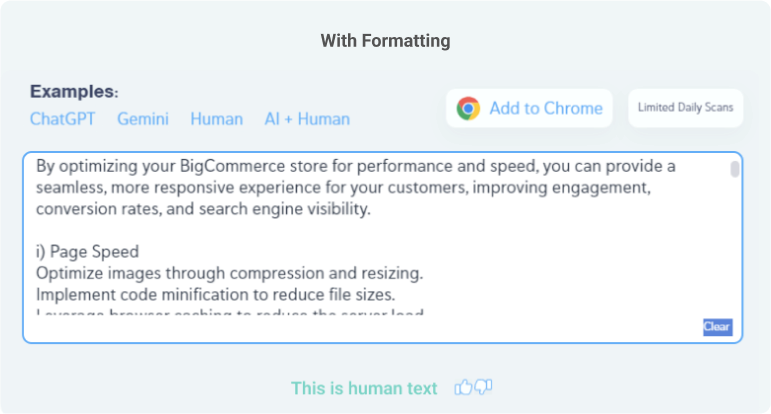

- Text Formatting and Structure: Minor formatting changes, such as adding bullet points, inserting headers, changing font styles, or adding spaces and extra commas, can lead to different results. For example, a plain text article might be flagged as human-written, but when formatted into sections with bullet points and headers, the same content might be detected as AI-generated. This discrepancy likely occurs because AI detection tools often rely on structural cues to differentiate between human and AI text.

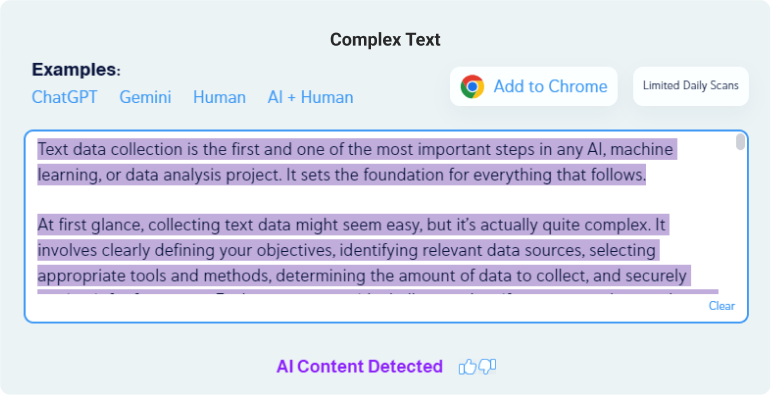

- Writing Style and Complexity: AI tools can mimic various writing styles, making it challenging to distinguish between human and AI-generated content. For instance, an AI trained on classic literature can produce text that closely resembles the writing style of famous authors like Shakespeare or Jane Austen. This ability to emulate diverse styles complicates the identification process, as the detection tool must differentiate between genuine human creativity and AI-generated imitation. Further, when one text is more complex or sophisticated than another, the verdict often follows how people perceive and evaluate language: complex text tends to be identified as human-written, while simpler text gets flagged as AI-generated.

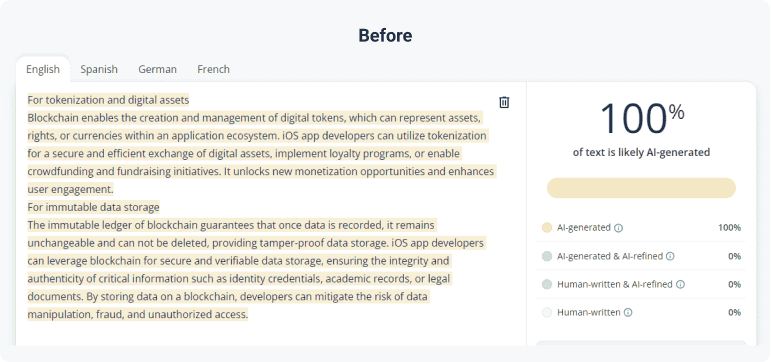

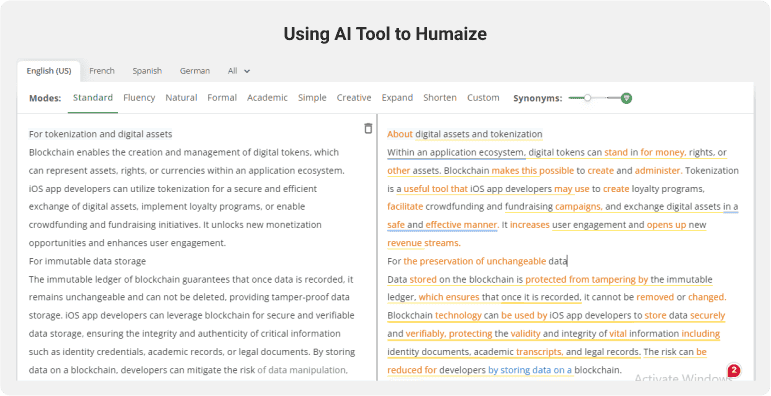

- Using Paraphrasing Tools to Humanize Content: Paraphrasing tools are often used to make content sound more natural and relatable, but they can also make it harder for AI detection tools to accurately assess whether the content is human-written or AI-generated. When content is rephrased to sound more human, it may trick detection tools into identifying AI-generated text as human-authored. This adds another layer of complexity to the detection process, as the subtle shifts in language and tone can blur the lines between human and AI writing.

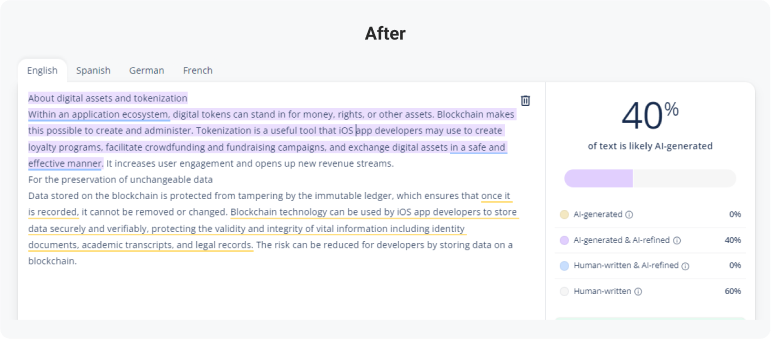

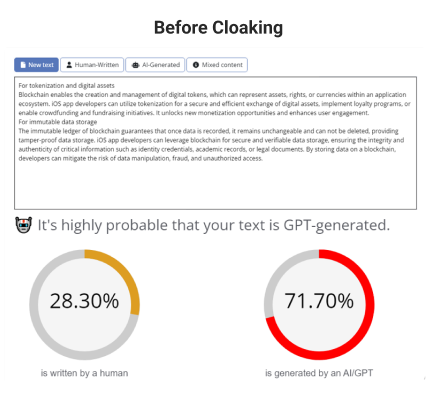

- Using Text-Cloaking Tools: Text-cloaking tools are designed to manipulate or obfuscate certain characteristics of AI-generated content to evade detection. These tools alter text subtly, for example by swapping in synonyms, adjusting sentence structure, or adding invisible characters, making it difficult for AI detection tools to accurately identify the content's origin. This deliberate obfuscation not only challenges the effectiveness of AI detection but also raises ethical concerns, as it can be used to deceive audiences or bypass content moderation systems.

- Topic and Context: The subject matter can significantly influence detection accuracy. Certain topics, such as highly technical or niche subjects, might be more prone to AI generation due to the vast data available for training AI models in these areas. For example, AI-generated scientific articles or technical manuals might be harder to distinguish from human-written content because AI can generate highly specific and accurate information within these domains. On the flip side, due to the extensive use of jargon, a technical article written by a human may be misidentified as AI-generated.

- AI Model Training Data: Detection tools trained on outdated models may not accurately identify text generated by newer AI systems. As AI technology advances rapidly, detection tools must be updated frequently to keep pace with the latest AI models. For instance, a detection tool trained on data from GPT-2 might struggle to identify text generated by GPT-3 or GPT-4, as these newer models have significantly improved language generation capabilities.
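One of the tricks from the text-cloaking point above, invisible characters, is at least easy to check for. Here is a minimal sketch, assuming a hand-picked set of zero-width code points; the set and the function names are my own illustration and are not taken from any detection tool.

```python
import unicodedata

# Hand-picked invisible code points (an assumption; this list is not exhaustive).
INVISIBLE = {
    "\u200b",  # zero width space
    "\u200c",  # zero width non-joiner
    "\u200d",  # zero width joiner
    "\u2060",  # word joiner
    "\ufeff",  # zero width no-break space (byte order mark)
}


def count_invisible(text: str) -> int:
    """Count how many characters from the INVISIBLE set appear in the text."""
    return sum(1 for ch in text if ch in INVISIBLE)


def strip_invisible(text: str) -> str:
    """Remove the invisible characters, then fold look-alike forms with NFKC."""
    cleaned = "".join(ch for ch in text if ch not in INVISIBLE)
    # NFKC maps compatibility forms (e.g. full-width letters) to their plain equivalents.
    return unicodedata.normalize("NFKC", cleaned)


cloaked = "AI\u200b-gen\u200derated"
print(count_invisible(cloaked), repr(strip_invisible(cloaked)))
```

A caveat: some of these characters have legitimate uses (the zero width joiner appears in emoji sequences and in several scripts), so a nonzero count is a reason to look closer, not proof of cloaking.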

Also, the Difference Between Human and AI-Generated Content Is Becoming a Bit Too Subtle

The principle is straightforward: AI-generated text mimics human writing. However, human language is inherently variable, with many styles, dialects, and idioms, and AI-generated text can fall anywhere within these variations in seemingly unpredictable ways. An AI content detection tool's pattern recognition algorithm has to pick out the subtle differences between those patterns without a predefined database to reference. The challenge is already complex and grows more so by the day.

And, as AI learns from humans, so do we, or at least the less skilled writers do. They can unknowingly start to mimic texts they have read online (which may have been created by AI and may or may not be of good quality). They can start to adopt commonly used phrases, words, linguistic patterns, and so on. This intermingling affects and infects both sides, and the result is AI-generated text that is increasingly indistinguishable from human speech or writing.

Given that scenario, how could any AI text detector claim to tell them apart? This makes it difficult to rely on AI detection tools.

AI Detection Tools Are Far from Perfect and Demand Caution

The dual challenges of false positives and false negatives are central to understanding the current limitations of these technologies. Detection tools are designed around known patterns, which makes them essentially static, and that static approach is rapidly being outpaced by the evolving capabilities of AI content generators.

There is a growing call to use these tools as part of a broader strategy that includes human judgment and alternative verification methods instead of relying solely on them to mark content as AI-generated or a human's work.

Rohit Bhateja

Rohit Bhateja, Director of Digital Engineering Services and Head of Marketing at SunTec India, is an award-winning leader in digital transformation and marketing innovation. With over a decade of experience, he is a prominent voice in the digital domain, driving conversation around the convergence of technology, strategy, customer experience, and human-in-the-loop AI integration.