- Introduction

- Not A Technical Glitch But An AI Governance Failure

- It Is No Longer Deniable: AI Doesn’t Replace Accountability

- Why This Keeps Happening: Because of the Illusion of Profit

- What Must Be Done: Restoring the Human Checkpoint

- What You Should Do Next

- If You Deployed AI in the Last 12 Months, Answer This Honestly

A shorter version of this article first appeared on my LinkedIn, covering what we all must learn from the recent Deloitte debacle involving the unchecked use of AI. Since then, the Deloitte AI scandal has kept getting worse.

In October 2025, one of the world's most prestigious consulting firms learned an expensive lesson about artificial intelligence.

Deloitte was left publicly embarrassed after delivering a 237-page report, commissioned for AU$440,000, to the Australian government. The report contained multiple AI-generated errors: fabricated court quotes, invented academic papers, and references to books that don't exist. Deloitte ultimately issued a partial refund.

One month later, Deloitte made international headlines again. This time, a 526-page report for the Canadian government, costing $1.6 million, cited research papers that don't exist (The Independent, Nov 2025).

Not A Technical Glitch But An AI Governance Failure

Two failures in two months reveal something critical: this wasn't a one-time mistake that slipped through. Someone trusted an algorithm more than they trusted process, verification, or professional judgment. They let a machine generate reports, skipped human review, and signed off on them.

The refunds are just the visible cost. The real damage—reputational harm, lost client trust, regulatory scrutiny—far exceeds both contract values combined.

Here's what should terrify you: If Deloitte, with its billions in AI investment and armies of PhDs, can deliver fiction to government clients twice within months, what makes you think your organization is immune?

What safeguards do you actually have in place for the AI your teams are using right now? What hidden price are you already paying for not validating AI usage across your organization?

It Is No Longer Deniable: AI Doesn’t Replace Accountability

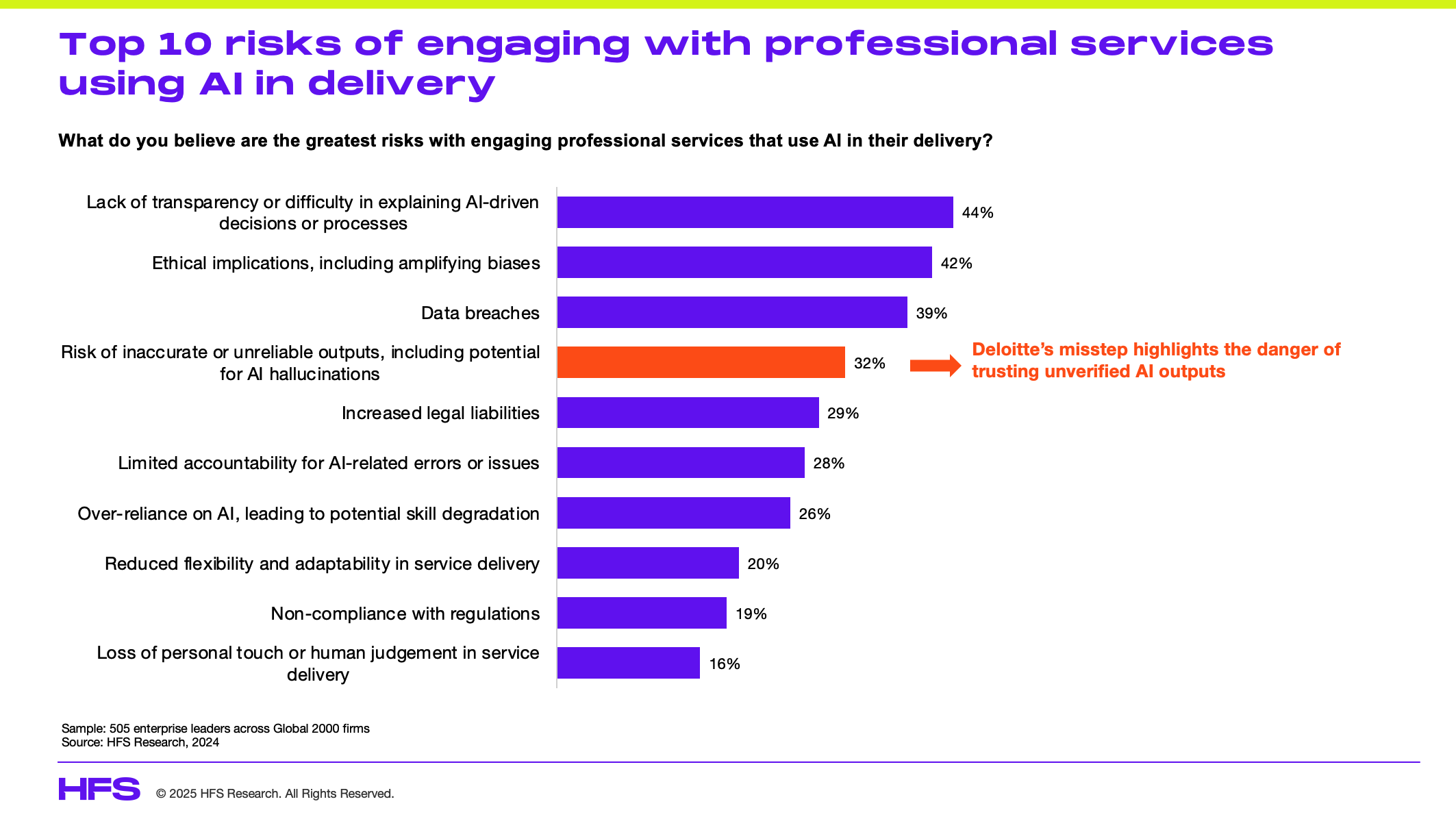

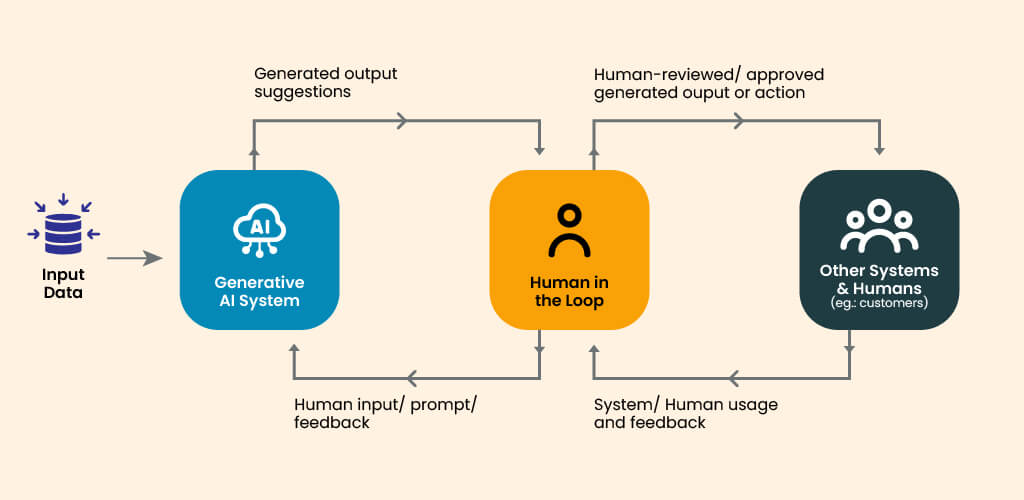

Source: HorsesforSources | Stop Treating AI Like a Magic Wand

This Deloitte AI scandal isn't an isolated case. The same pattern of expensive AI failures is playing out across industries:

- In Legal Services

A New York lawyer used ChatGPT for legal research and filed a brief citing six completely fabricated cases—fake quotes, fake docket numbers, entirely convincing-looking citations that didn't exist. The judge fined him $5,000 and the case became a cautionary tale. (Mata v. Avianca, Inc., S.D.N.Y., June 2023).

According to a Stanford study, AI hallucinated legal cases 75% of the time when asked about court rulings (Stanford HAI, 2024). Legal researcher Damien Charlotin tracks these incidents: he had logged 120+ documented cases of AI hallucinations in court filings as of May 2025 (Yahoo News, May 2025), and the pace of new cases rose from 2 per week in early 2025 to 2-3 per day by October.

- In Customer Service

Air Canada's chatbot told a grieving customer that he could claim a bereavement fare after his journey, which was false. When the customer relied on that advice and requested his refund, Air Canada argued the chatbot was "a separate legal entity" responsible for its own actions.

The tribunal rejected that argument, calling the airline's claim that its online helper was responsible for its own actions "remarkable". Air Canada was held liable for negligent misrepresentation, and the customer was awarded damages (Moffatt v. Air Canada, B.C. Civil Resolution Tribunal, February 2024).

- In Operations

McDonald's deployed IBM's AI drive-thru ordering system at 100+ locations. Viral videos showed the system adding bacon to ice cream, ordering 260 chicken nuggets unprompted, and mixing up orders from adjacent lanes. After three years and millions invested, McDonald's announced in June 2024 that it was pulling the plug, with the system to be switched off by late July (CNBC, June 2024).

- In Education & Security

An AI-powered gun detection system from Omnilert, worth millions of dollars, was deployed at certain U.S. schools to improve campus security through image recognition. In October 2025, the system incorrectly flagged an empty Doritos chip bag as a firearm. The alert sent eight police cars with armed officers to the scene, where officers handcuffed a teenager who was understandably confused and distressed.

Earlier this year, the same system failed to detect a gun carried by a student and so could not avert a school shooting in Nashville, Tennessee. That supports the claim from several surveillance experts that there is no concrete evidence that gun detection software is effective at preventing school shootings (WJLA, Nov 2025).

Why This Keeps Happening: Because of the Illusion of Profit

The pattern is predictable: companies deploy AI to cut costs and accelerate delivery, then skip the unglamorous work of verification because it's "inefficient."

The economics are seductive—use AI to draft faster, bill the same, pocket the margin. But that margin is a mirage. Organizations aren't saving money. They're taking on hidden liability that increases exponentially with every unverified output.

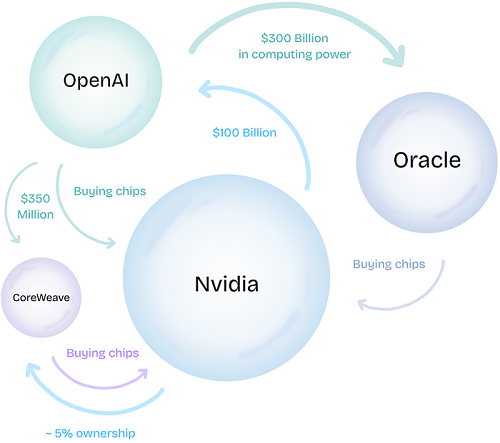

A Potential AI Bubble in the Works?

In November 2025, Alexandria Ocasio-Cortez (U.S. Representative for New York's 14th congressional district) warned that heavy, unjustified investment in AI is creating a "massive economic bubble" with risks similar to the 2008 financial crisis, driven by an "absolute pursuit of profit" without guaranteed returns. She cautioned that company valuations are based on expectations rather than actual profit (CommonDreams, Nov 2025).

Her concerns aren't unfounded. A Bank of America survey found that 45% of fund managers view a potential AI bubble as the most significant tail risk to markets (Wall Street Journal, Nov 2025). The concentration is staggering: just a handful of companies—Microsoft, Google, Amazon, and Meta—are driving the majority of U.S. stock market gains through AI investments.

The Trickle-Down Effect for Businesses like Yours

Organizations across industries are stretching budgets aggressively on AI-related projects, creating what economists call a capital misallocation problem. Companies are spending millions on AI capabilities they haven't yet proven can generate returns—all while racing to show profits that justify the investment.

This creates a dangerous feedback loop: the more money invested in AI, the more pressure executives face to deploy it everywhere—verification and governance be damned.

The longer it takes to show ROI, the more corners get cut. And the more corners that get cut, the more failures like the Deloitte AI reports, Air Canada, and Omnilert occur.

This is Where Your Data Quality Goes to Die

The teams responsible for data quality—the data stewards, the subject matter experts, the validators who understand your business context—become "inefficiencies" to eliminate. The verification steps that catch AI errors before they corrupt your production systems become "bottlenecks" to remove.

I've watched this exact sequence play out with clients who came back to us after their "AI will handle it" initiatives failed. The story is always the same: the excitement fades once AI is scaled, and problems almost always start surfacing four to six months in. By the time they realize that months of corrupted data now power critical business systems, there is no one left who understands the data well enough to fix it quickly and with confidence in the result.

Sure, that's where specialists like my team come in. But getting to that point is not ideal for any company and should be prevented. There is no profit here. You end up paying for verification anyway, just at 5-10x the cost, after the damage is done, with your business decisions compromised in the interim or your brand reputation in severe jeopardy.

What Must Be Done: Restoring the Human Checkpoint that Is Often Eliminated to Create the Profit Margin

Source: SunTec India | AI & Human Intelligence – An Effective Approach for Modern Data Management

We work with organizations across healthcare, finance, manufacturing, logistics, and several other industries where data accuracy isn't optional. Over the past two years, I’ve seen AI-related data quality problems in those domains go from subtle to explosive.

I’ll give you a couple of examples:

AI "cleaned" customer databases by hallucinating information. One client discovered their sales team was calling phone numbers that looked perfect in the system—proper formatting, passed all automated validation checks—but were completely disconnected. The AI had scraped outdated sources and confidently filled in gaps with numbers that technically existed but belonged to entirely different people or businesses.

AI merged records that should have stayed separate. A pharmaceutical client had intentionally maintained duplicate doctor entries—same person, different hospital affiliations—because compliance tracking required separate records for each location. The AI saw "duplicates" and merged them. Six months later, during an audit, they discovered compliance violations that could have triggered regulatory penalties.

The common thread? The AI output looks flawless. Clean formatting. No obvious errors. It even passes the automated checks. It takes human review (and sometimes a public debacle) to reveal the problems.
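One way to keep a business rule like the pharmaceutical client's from being silently overridden is to write it into the deduplication key itself. The sketch below is illustrative only: the record fields (`name`, `license_id`, `hospital`) and the functions `dedupe_key` and `group_duplicates` are hypothetical, not the client's actual schema or tooling.

```python
from collections import defaultdict

# Hypothetical doctor records: the same person at two hospitals,
# plus one true duplicate (same person AND same hospital).
records = [
    {"name": "Dr. A. Rao", "license_id": "MD-1001", "hospital": "City General"},
    {"name": "Dr. A. Rao", "license_id": "MD-1001", "hospital": "Lakeside Clinic"},
    {"name": "Dr A Rao", "license_id": "MD-1001", "hospital": "City General"},
]


def dedupe_key(record):
    """Assumed business rule: a doctor is tracked separately per hospital."""
    return (record["license_id"], record["hospital"].strip().lower())


def group_duplicates(rows):
    """Group rows by the business key instead of by name similarity."""
    groups = defaultdict(list)
    for row in rows:
        groups[dedupe_key(row)].append(row)
    return groups


groups = group_duplicates(records)

# Only rows sharing the full key are merge candidates; the Lakeside Clinic
# entry stays separate even though the doctor is the same person.
merge_candidates = {key: rows for key, rows in groups.items() if len(rows) > 1}
```

Even then, the merge candidates are better treated as a review queue for someone who knows the compliance rules than as a list to merge automatically.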

This Isn't about AI being "Bad at its Job"

AI excels at pattern recognition and processing speed. The problem is that data quality requires something AI fundamentally cannot provide: contextual understanding of your business rules and institutional knowledge that is not always easy to turn into instructions for AI.

Let me show you what this looks like in practice.

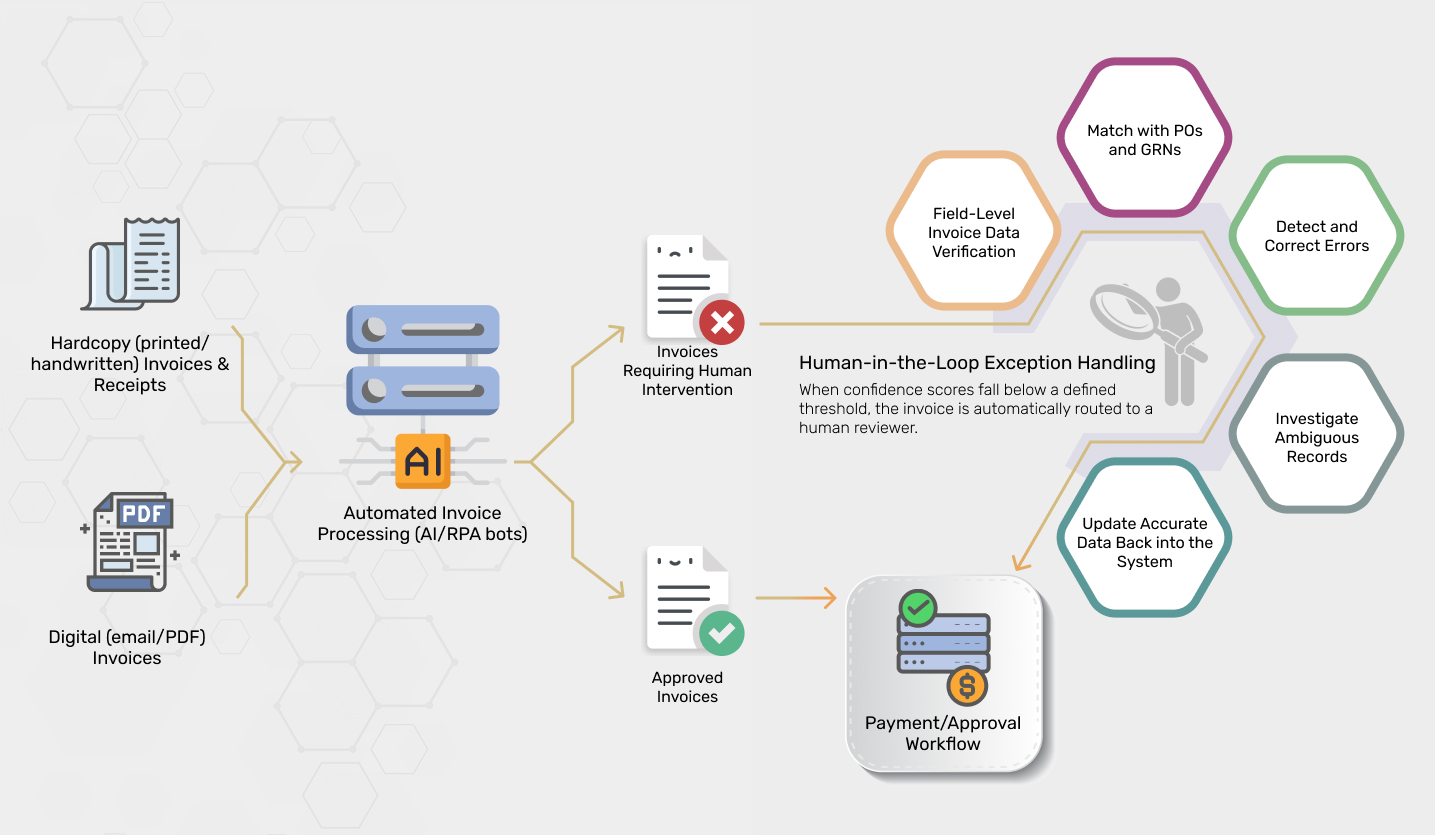

We worked with a healthcare client processing tens of thousands of invoices monthly. They initially deployed an AI-powered system that promised faster invoice processing with minimal human intervention. The AI handled volume beautifully. It extracted data fast, matched invoices to purchase orders, and flagged discrepancies. But it kept creating exceptions that compounded into massive backlogs. For example, it kept flagging legitimate duplicate procedures or "correcting" intentional vendor name variations, then adding a low confidence score to that field and categorizing it as an exception.

So, we assigned subject matter experts to clear each exception as it arose, validating the invoice data and cross-referencing it against multiple sources. The results: 99.95%+ accuracy, 80% reduction in backlogs, and zero compliance violations.

What You Should Do Next: A Framework for Data Quality Management in AI Operations

Source: SunTec India | The Necessity for Humans in the Loop of Generative AI

Before you automate your next data process—whether it's enrichment, cleaning, classification, or validation—ask yourself:

- Can you trace every change the AI makes?

Six months from now, when an error surfaces, can you identify which AI model touched that data? Which version? What logic it applied? If not, you can't fix systemic problems—you can only find them one record at a time.

- Can you reverse it if something goes wrong?

If your AI "cleaned" 50,000 customer records and you discover it hallucinated phone numbers, can you roll back those changes? Or are they permanently mixed into your system with no way to separate AI modifications from verified data?

- Do you know when it's wrong before it enters production?

Does your system flag uncertainty and route it to human review? Or does AI make confident-looking changes that sail through validation checks until real-world consequences expose the errors?

If you can't answer yes to all three, you're not ready to remove human oversight.
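To make the three questions concrete, here is a minimal sketch of a data store that logs every AI change with its model version, holds low-confidence proposals for human review, and can roll back one model version's changes. Everything in it is an assumption for illustration: the names `Change`, `AuditedStore`, and `REVIEW_THRESHOLD`, the 0.90 cut-off, and the in-memory storage. A real system would persist the log and would also have to handle later edits to the same field.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

REVIEW_THRESHOLD = 0.90  # assumed cut-off, not a recommended value


def _now():
    return datetime.now(timezone.utc).isoformat()


@dataclass
class Change:
    record_id: str
    field_name: str
    old_value: str
    new_value: str
    model: str
    model_version: str
    confidence: float
    changed_at: str = field(default_factory=_now)


class AuditedStore:
    def __init__(self, records):
        self.records = records   # record_id -> dict of field values
        self.log = []            # applied changes, oldest first
        self.review_queue = []   # uncertain proposals awaiting a human

    def propose(self, change):
        """Apply confident changes; hold uncertain ones for human review."""
        if change.confidence < REVIEW_THRESHOLD:
            self.review_queue.append(change)
            return False
        self.records[change.record_id][change.field_name] = change.new_value
        self.log.append(change)
        return True

    def rollback(self, model, model_version):
        """Undo logged changes from one model version, newest first.

        Simplification: assumes no later edit touched the same field.
        """
        undone, kept = 0, []
        for change in reversed(self.log):
            if (change.model, change.model_version) == (model, model_version):
                self.records[change.record_id][change.field_name] = change.old_value
                undone += 1
            else:
                kept.append(change)
        self.log = list(reversed(kept))
        return undone


store = AuditedStore({"c1": {"phone": "555-0100"}, "c2": {"phone": ""}})
applied = store.propose(Change("c1", "phone", "555-0100", "555-0199", "cleaner", "v2", 0.97))
queued = store.propose(Change("c2", "phone", "", "555-0142", "cleaner", "v2", 0.55))

# Question 1: which records did version v2 touch?
touched = [c.record_id for c in store.log if c.model_version == "v2"]

# Question 2: reverse everything that version did.
undone = store.rollback("cleaner", "v2")
```

In this sketch the low-confidence phone number for `c2` never reaches the record at all; it waits in `review_queue`, which is the third question answered in code.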

If You are Buying AI Software Tools or Subscriptions

Understand the Real Cost of "Fast and Cheap"

An AI platform promising 40% efficiency gains through "full automation" isn't delivering value—it's transferring risk to your organization. When that tool hallucinates phone numbers, merges records incorrectly, or confidently fills data gaps with fiction, the consequences and cleanup happen at your expense.

The uncomfortable questions to ask your software vendor before you deploy:

- "What happens when your AI is wrong?"

- "How do we identify which data your tool touched if we discover errors months later?"

- "Can we reverse changes your AI made, or are they permanent once processed?"

If the vendor's answers are vague ("our accuracy rates are industry-leading"), defensive ("AI errors are extremely rare"), or dismissive ("you can always validate outputs manually"), you've learned everything you need to know: they're selling you a tool without accountability for its failures.

Know What to Demand from AI Tools

- Error transparency: What's the actual hallucination rate in production? Not marketing claims—real operational data from existing customers.

- Traceability: Does the tool log which data it modified and how? Can you export audit trails?

- Confidence scoring: Does it flag uncertain outputs, or does everything look equally confident?

- Rollback capability: If the tool processes 100,000 records and you discover systematic errors, can you undo its changes?

If a vendor can't or won't provide these capabilities, the tool isn't enterprise-ready—no matter how impressive the demo was.

If You are Outsourcing Data Services to External Providers

Demand Explicit Disclosure about AI Use

If you outsource data quality management, enrichment, or processing to third-party vendors, the Deloitte AI scandal should fundamentally change how you evaluate them. "AI-powered" is no longer a selling point—it's a red flag that demands scrutiny.

Require your Service Provider to Specify

- Which specific AI tools or models they use

- For what exact purposes they will use AI in your data workflow

- What verification process follows AI outputs before delivery

"We use AI for data extraction and pattern detection, followed by specialist validation of all exceptions before delivery" is accountability.

"AI-enhanced workflow" or "AI-assisted process" without specifics is a loophole.

Make Verification Requirements Contractual

Your service agreement should include documentation of:

- Which data points were AI-generated vs. human-verified

- What confidence thresholds trigger human review

- How exceptions are handled before the treated data enters your systems

- Audit trails showing who validated what and when
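As a rough illustration of what such documentation could look like in a delivery file, the sketch below attaches a provenance entry to each delivered field and summarizes a batch against an agreed threshold. The `FieldProvenance` structure, the `delivery_summary` function, the reviewer IDs, and the 0.90 threshold are all hypothetical; the actual fields and thresholds would come from your own contract.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldProvenance:
    record_id: str
    field_name: str
    source: str                  # "ai" or "human"
    confidence: Optional[float]  # model confidence; None for human-entered values
    validated_by: Optional[str]  # reviewer ID, or None if nobody checked it
    validated_at: Optional[str]  # ISO 8601 timestamp of the check


def delivery_summary(entries, review_threshold):
    """Summarize a delivery and list AI values that skipped required review."""
    ai_entries = [e for e in entries if e.source == "ai"]
    breaches = [
        e for e in ai_entries
        if e.confidence is not None
        and e.confidence < review_threshold
        and e.validated_by is None
    ]
    return {
        "ai_generated": len(ai_entries),
        "ai_human_verified": sum(1 for e in ai_entries if e.validated_by),
        "human_entered": sum(1 for e in entries if e.source == "human"),
        "breaches": breaches,
    }


entries = [
    FieldProvenance("r1", "phone", "ai", 0.98, None, None),
    FieldProvenance("r2", "phone", "ai", 0.60, "reviewer-7", "2025-11-03T10:15:00Z"),
    FieldProvenance("r3", "phone", "ai", 0.55, None, None),
    FieldProvenance("r4", "email", "human", None, "reviewer-7", "2025-11-03T10:20:00Z"),
]
summary = delivery_summary(entries, review_threshold=0.90)
breach_ids = [e.record_id for e in summary["breaches"]]
```

Here `r3` is the entry a client would want surfaced: an AI-generated value below the agreed threshold that no one validated before delivery.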

It Definitely Pays to Be Transparent About “Human-in-the-Loop”

As a service provider, I can stand firmly behind this statement. We practice what we're preaching.

SunTec India has been an ardent advocate of expert-verified AI workflows ever since we adopted a human-in-the-loop approach across our services. We don't hide it. We lead with it.

Take invoice processing, one of our core workflows. We don't just mention HITL in our service descriptions; we spell it out (AI extracts invoice line items, a specialist validates amounts against the PO, discrepancies are flagged for manager review) in every client communication, every proposal, and every delivery report.

So, our clients know where the real risk lives.
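The invoice checkpoint described above can be sketched in a few lines. This is a simplified illustration, not our production workflow: the function `find_discrepancies`, the SKU keys, the queue names, and the zero tolerance are assumptions, and the check is meant to support the specialist's validation rather than replace it.

```python
from decimal import Decimal


def find_discrepancies(extracted_items, purchase_order, tolerance=Decimal("0.00")):
    """Compare AI-extracted line amounts against the PO, item by item."""
    discrepancies = []
    for sku, amount in extracted_items.items():
        expected = purchase_order.get(sku)
        if expected is None:
            discrepancies.append((sku, amount, None, "not on PO"))
        elif abs(amount - expected) > tolerance:
            discrepancies.append((sku, amount, expected, "amount mismatch"))
    # PO lines the extraction never produced are also worth a human look.
    for sku in sorted(purchase_order.keys() - extracted_items.keys()):
        discrepancies.append((sku, None, purchase_order[sku], "missing from invoice"))
    return discrepancies


# Hypothetical purchase order and AI-extracted invoice lines.
purchase_order = {
    "SKU-1": Decimal("120.00"),
    "SKU-2": Decimal("45.50"),
    "SKU-3": Decimal("10.00"),
}
extracted = {
    "SKU-1": Decimal("120.00"),
    "SKU-2": Decimal("54.50"),  # transposed digits
    "SKU-9": Decimal("5.00"),   # line that is not on the PO
}

issues = find_discrepancies(extracted, purchase_order)

# Clean invoices go to the specialist for sign-off; anything else is escalated.
queue = "manager_review" if issues else "specialist_signoff"
```

Amounts are held as `Decimal` rather than floats so that a comparison like 45.50 against 54.50 is exact and never blurred by rounding.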

Source: SunTec India | Closing Accuracy Gaps in Healthcare AP Automation Software

I’ll give you another example – when we accept a B2B data enrichment project, clients understand exactly how we use AI to extract data or flag anomalies, and how we route exceptions to subject matter experts. We prove through sample tasks that humans validate every extracted data field against source documents, resolve discrepancies, and sign off on accuracy before data enters client systems.

It changes the conversation from “efficiency at any cost” to “accountability with accuracy”. When clients understand that a trained specialist, not just an algorithm, verified their data, they trust the output. When problems surface, we can trace exactly where the human checkpoint caught or missed the issue. That's professional accountability that protects both parties.

The business case is simple: We're faster than pure manual processing and more reliable than pure AI. Clients pay for speed and accuracy. We deliver both because we refuse to eliminate the human checkpoint that ensures quality.

But I digress. The question isn't whether to use AI. It's whether you have the discipline to use it responsibly.

If You Deployed AI in the Last 12 Months, Answer This Honestly

Can you produce an audit trail showing which data your AI modified, when, and who verified it? If auditors or regulators asked tomorrow, could you demonstrate your validation process in 5 minutes, or would it take 5 days?

If the answer makes you uncomfortable, you already know what needs to change.

AI is a powerful tool. It's also a loaded weapon when deployed without human oversight. The firms that get ahead of this shift—that build transparent, verified AI workflows now—will own the market. The firms that continue automating without accountability will face the same choice Deloitte did: refund the contract and repair the damage.

The Deloitte AI report scandals were not a technology problem. They were decisions to trust automation over verification. That same decision is being made in boardrooms right now—possibly yours. You must pause and reconsider, because the next $440,000 mistake might be yours—and unlike Deloitte, you might not survive it.

Rohit Bhateja

Rohit Bhateja, Director of Digital Engineering Services and Head of Marketing at SunTec India, is an award-winning leader in digital transformation and marketing innovation. With over a decade of experience, he is a prominent voice in the digital domain, driving conversation around the convergence of technology, strategy, customer experience, and human-in-the-loop AI integration.