A Fortune 500 retailer made what seemed like a smart move in early 2025: they cut their 85-person data quality team (saving $6.8M annually) and deployed an AI-powered data management platform instead. The ROI projections looked spectacular.

By October, their quarterly earnings call included an unexpected line item: $47 million in "operational adjustments and data remediation costs." Here's what actually happened:

- 14,800 shipments went to wrong addresses because AI "standardized" apartment numbers out of existence ($2.9M in reshipments and credits)

- The CRM auto-updated 180,000 customer income brackets based on demographic inference, triggering luxury campaigns to budget shoppers (12% opt-out rate, $8.2M in lost lifetime value)

- The AI merged duplicate vendor records but corrupted their payment history: the system showed 6,000 already-paid invoices as "unpaid" and reprocessed them ($30M in double payments; $19M recovered after 4 months, leaving an $11M net loss plus $2M in legal and accounting recovery costs)

- A compliance audit discovered 40,000+ records modified without a proper audit trail ($4.8M in penalties and external audit fees)

Their "savings": $6.8M. Their actual cost: $47M. That's a cost nearly 7x the savings, and a mess that my data team is still cleaning up.

That's why, as the hype around AI in data management grows, I want to stress that companies must look past the noise and focus on what really matters for enterprise data quality.

The Numbers Behind the Hype of AI Data Management Solutions (and the Reality)

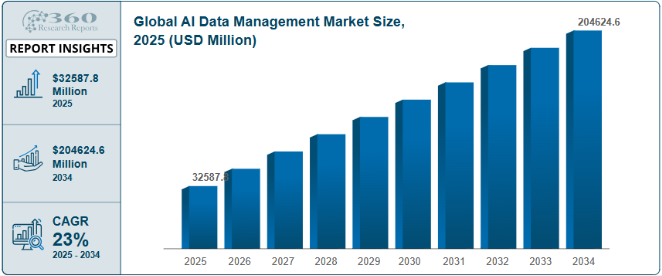

Enterprise investments in AI data platforms rose by 43% between 2023 and 2025. The global AI data management market is projected to grow from USD 32.6 billion in 2025 to USD 204.6 billion by 2034. Nearly 30% of enterprise AI budgets are being spent on data management solutions (data governance, privacy, and accessibility). And reportedly, 73% of companies have adopted AI-driven systems to 'optimize' their data handling.

Source: 360ResearchReports, AI Data Management Market Size, Share, Growth, and Industry Analysis

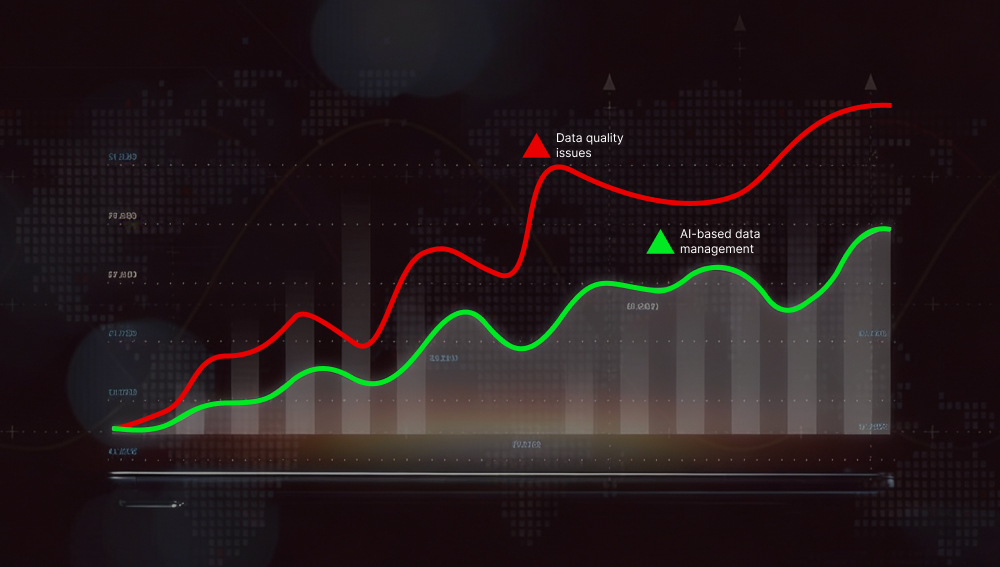

But here's what no one’s telling you: AI is becoming part of the problem, not the solution.

Here’s what that staggering investment in AI for data quality management is actually buying, as per the 360ResearchReports analysis:

- 34% of core workflows remain broken.

- 39% of solutions run into compatibility problems.

- Half the organization still doesn’t trust the system with sensitive data.

- Data breach risk goes up by 35%.

Bottom line: using AI for data management does not solve your data problems. It disguises them behind a dashboard and adds new attack vectors. Worse, by the time you discover the problems, the cost of cleaning up the mess or paying penalties for non-compliance can easily exceed whatever you “saved” with AI.

AI Can't Solve What AI Doesn't Understand

Data is messy because your organization is messy, and AI will just automate the mess.

The fundamental problem with AI-powered data quality solutions is simple: they treat symptoms, not causes.

Consider a typical data quality issue in your organization right now. Perhaps customer records are duplicated across systems. An AI tool will identify the duplicates, maybe even merge them. Problem solved, right?

Not even close.

What the AI sees: Three records for "John Smith" with similar contact information—obvious duplicates that need merging.

What the AI doesn't see (the real problem):

- Your Sales team enters new leads in Salesforce because that's "their" system

- Marketing automatically imports web form submissions into HubSpot because that's "their" system

- Customer Service creates fresh records in Zendesk when customers call because that's "their" system

So the AI merges the three John Smith records on Monday. By Tuesday, Sales has created a new lead, Marketing has imported a new contact from another web form, and Customer Service has opened a new ticket along with a fresh contact record. You're back to three duplicates.

The AI didn't solve anything, because the root cause was never weak duplicate detection. The root cause of this data quality issue is that three departments operate in silos, on incompatible systems, with no single source of truth.
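
To make the distinction concrete, here is a minimal Python sketch, not a production matcher. It assumes customers can be matched on a normalized email address (real matching is rarely that simple), and the names `dedupe` and `MasterRegistry` are hypothetical. It contrasts a one-off merge with a single entry point that all three systems would have to call.

```python
# Minimal sketch, assuming customers can be matched on a normalized email.
# dedupe() and MasterRegistry are hypothetical names, not any vendor's API.
from dataclasses import dataclass, field


def normalize_email(email: str) -> str:
    """Lowercase and trim so ' John.Smith@X.com' matches 'john.smith@x.com'."""
    return email.strip().lower()


def dedupe(records: list[dict]) -> list[dict]:
    """One-off cleanup: keep the first record seen for each normalized email."""
    seen: dict[str, dict] = {}
    for rec in records:
        seen.setdefault(normalize_email(rec["email"]), rec)
    return list(seen.values())


@dataclass
class MasterRegistry:
    """Single entry point that Sales, Marketing, and Support would all call."""
    by_key: dict = field(default_factory=dict)
    next_id: int = 1

    def resolve(self, email: str) -> int:
        """Return the existing master ID for this customer, or mint a new one."""
        key = normalize_email(email)
        if key not in self.by_key:
            self.by_key[key] = self.next_id
            self.next_id += 1
        return self.by_key[key]


# Monday: a one-off merge collapses three records into one.
monday = dedupe([
    {"system": "salesforce", "email": "john.smith@example.com"},
    {"system": "hubspot", "email": "John.Smith@example.com"},
    {"system": "zendesk", "email": " john.smith@example.com"},
])

# Tuesday: each department keeps writing to "its" system, so duplicates return.
tuesday = monday + [
    {"system": "salesforce", "email": "john.smith@example.com"},
    {"system": "hubspot", "email": "john.smith@example.com"},
    {"system": "zendesk", "email": "john.smith@example.com"},
]

# With a shared registry, every system resolves to the same master ID instead.
registry = MasterRegistry()
ids = {registry.resolve(r["email"]) for r in tuesday}
```

The matching logic is deliberately naive; what matters is where it runs. Getting Sales, Marketing, and Customer Service to route every new record through one registry is an ownership and process decision, not a modeling one.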

Here's What Actually Causes Data Quality Problems for a Typical Enterprise:

- Unclear ownership - Nobody knows who's responsible for master customer data.

- Process gaps - No standardized workflow for data entry across regions.

- Competing definitions - The baseline data doesn’t match because every department defines data fields according to its own processes.

- Legacy systems - Data silos that no one wants to centralize because of the perceived risk.

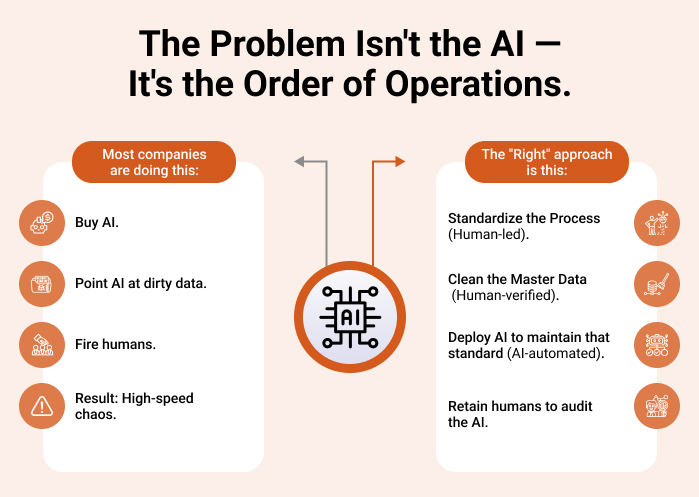

Notice that NONE of these problems can be fixed by AI. These are organizational problems that require human decisions and process changes. AI tools can only work AFTER you've solved these issues.

The Real Danger of AI in Data Management: Plausible Errors that Cost Millions

The most dangerous AI errors aren't the obvious ones. They're the plausible ones—changes that look correct on the surface but are catastrophically wrong in context.

The Million-Dollar Marketing Campaign

One of our clients using an AI data management platform faced this situation: the system updated a customer's income bracket from $75K to $125K based on demographic inference. The number looked reasonable, so nothing flagged it. Their marketing team sent luxury product promotions to budget-conscious customers, many of whom unsubscribed, annoyed by irrelevant offers.

Imagine yourself in their shoes and multiply this across every customer record the AI touched. You've just:

- Lost a share of those customers, and their lifetime value with them

- Wasted possibly thousands in targeted ad spend on the wrong audience

- Damaged your brand perception

But here is what I feel is the worst part: you would never trace the damage back to the AI data management solution without a detailed audit.
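
One way to make such changes traceable is to store where every value came from. Below is a minimal Python sketch, assuming three provenance levels (inferred, observed, declared) and a hypothetical `update_field` helper; it also assumes, purely for illustration, that the original $75K bracket was declared by the customer. Under that rule the inferred $125K lands in a review queue instead of the CRM, and the `changed_by` field leaves a trail an auditor can follow.

```python
# Minimal sketch: each field value carries its provenance, and a weaker
# source (e.g. demographic inference) never silently overwrites a stronger one.
# Provenance levels and update_field() are illustrative assumptions.
from dataclasses import dataclass
from enum import IntEnum


class Provenance(IntEnum):
    INFERRED = 1   # model guess, e.g. income from demographics
    OBSERVED = 2   # derived from the customer's own behavior
    DECLARED = 3   # stated directly by the customer


@dataclass
class FieldValue:
    value: object
    source: Provenance
    changed_by: str  # who or what wrote this value, for later audits


def update_field(current: FieldValue, proposed: FieldValue, review_queue: list) -> FieldValue:
    """Apply the proposal only if its provenance is at least as strong.

    Weaker proposals go to a human review queue and the current value is kept.
    """
    if proposed.source >= current.source:
        return proposed
    review_queue.append((current, proposed))
    return current


queue: list = []
income = FieldValue("$75K", Provenance.DECLARED, "customer_signup_form")
guess = FieldValue("$125K", Provenance.INFERRED, "ai_enrichment_job")

income = update_field(income, guess, queue)  # keeps $75K, queues the guess
```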

Bad Data Cascading through the System

Picture this: your AI data quality management system "standardizes" addresses like "123 Main St, Apt 4B" to "123 Main Street." Why would the AI do this? Apparently because it was trained primarily on single-family home addresses, where unit designators are rare. When it validates against USPS databases, it finds "123 Main Street" to be a valid address and treats "Apt 4B" as inconsistent formatting to be removed (a detail no one but the vendor selling that AI would know). Your system accepts the change because the address looks valid, even though it is incomplete. The package gets delivered to the building lobby, not to apartment 4B.

This is a case from another one of our recent clients. This single plausible error led to several downstream failures for them:

- The customer called: "Where's my order?" (10 minutes of support time, $12 cost)

- Support investigated and expedited a replacement shipment ($28 shipping cost)

- The original package sat in the lobby for 3 days and was eventually returned ($14 return shipping)

- Customer frustration led to a negative review (which impacted several potential customers' purchase decisions)

- The operations team spent 30 minutes investigating "delivery issues" and updating procedures

The retailer we were working with shipped to 250,000 apartment addresses in 6 months, and this happened to nearly 15,000 of them: a 6% error rate that incurred over $1.2M in direct shipping costs alone. Once customer churn, support load, and operational overhead are counted, the true cost would be closer to $3M.

This is the hidden threat of AI data management: errors that look correct but cascade through your systems, multiplying costs with each downstream failure.
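
The apartment-number failure has a cheap, deterministic defense: before accepting any "standardized" address, check that no unit designator disappeared. Here is a minimal Python sketch. The designator list is an assumption and far from complete (postal standards define many more secondary unit designators), and `accept_standardization` is a hypothetical hook you would place in front of whatever tool proposes the rewrite.

```python
# Minimal sketch: reject any address rewrite that drops a secondary unit
# designator. The designator list is an illustrative assumption, not a
# complete postal rule set; accept_standardization() is a hypothetical hook.
import re

UNIT_PATTERN = re.compile(
    # Designator word (whole word only, so "Flower St" doesn't match "fl"),
    # optional period, optional "#", then the unit identifier.
    r"\b(?:apt|apartment|unit|ste|suite|fl|floor|rm|room)\b\.?\s*#?\s*([a-z0-9-]+)"
    # Or a bare "#4B" style unit.
    r"|#\s*([a-z0-9-]+)",
    re.IGNORECASE,
)


def unit_designators(address: str) -> set[str]:
    """Return the unit identifiers found in an address, upper-cased (e.g. {'4B'})."""
    return {(a or b).upper() for a, b in UNIT_PATTERN.findall(address)}


def accept_standardization(original: str, standardized: str) -> bool:
    """Accept the rewrite only if every unit in the original survives it."""
    return unit_designators(original) <= unit_designators(standardized)


original = "123 Main St, Apt 4B"
print(accept_standardization(original, "123 Main Street"))     # False: unit 4B dropped
print(accept_standardization(original, "123 MAIN ST APT 4B"))  # True: unit preserved
```

A rejected rewrite should route to a person rather than silently fall back. The value of the rule is that it turns a plausible error into a loud one.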

AI Instead of People: The False Trade-Off

In the rush to build "cost-efficient" models, organizations are actively swapping institutional knowledge for algorithms. The market trends make this very clear: capital is flowing into AI valuations and out of human payroll.

- HP plans to cut about 6,000 jobs by 2028 [Reuters] while ramping up its AI efforts.

- Cybersecurity software maker CrowdStrike is letting go of 500 employees [CNBC], about 5 percent of its workforce, as it doubles down on AI.

- Salesforce has removed roughly 4,000 customer support roles [CNBC], replacing them with AI bots.

- McKinsey is cutting about 200 internal tech roles [Bloomberg] as part of an AI-driven overhaul of its support functions.

- xAI has reportedly laid off at least 500 data annotation workers [Reuters] who helped train its Grok chatbot.

The belief that AI can take over a large share of the work people used to do is getting stronger, and these layoff announcements reflect it clearly: the companies largely attribute them to AI.

"AI flattens our hiring curve and helps us innovate from idea to product faster."

- George Kurtz, CEO, CrowdStrike

"Because of the benefits and efficiencies of Agentforce (customer service bots), we no longer need to actively backfill support engineer roles."

- Marc Benioff, CEO, Salesforce

Meanwhile, AI startup valuations are jumping two to three times within just a few months, driven by repeated funding rounds. Anthropic is a good example. In March, it closed a $3.5 billion Series E round at a valuation of $61.5 billion. By September, after a $13 billion Series F, that valuation had jumped to $183 billion [Source: Fortune].

But Here Is the Illusion of Savings: When You Fire the Human, You Fire the Context

Because organizations are stripping away the only layer of defense capable of understanding nuance—the human—and replacing it with a machine that prioritizes confidence over accuracy, they are turning messy but fixable problems into invisible catastrophes.

A human data steward might hesitate: "Wait, this contract renewal has pricing from the old package. Should I verify with the account manager before updating?"

The AI has no hesitation. Its goal is record completeness and processing speed. It sees the inconsistency, determines that the old pricing is the most common value for this account type, and applies it to the record without verification.

This is where the math falls apart, even though the dashboard shows high confidence, high completion rate, and low processing time. Leadership sees efficiency gains now, and yet, six months later, you discover hundreds of customers were billed incorrectly. Now you're facing refunds, compliance reviews, and customer trust issues that far exceed any efficiency savings.

The Hidden Cost of AI Data Management: Why "Savings" Become Catastrophic Losses

If you only look at what you stopped paying in salary and not what you started paying in hidden cleanup costs, you're not measuring savings—you're measuring wishful thinking.

Here's the actual cost structure of AI-first data management:

Upfront Investment (What You Track)

- AI platform licensing (Let’s assume $500K-$5M+ annually for a mid-range to leading AI data management solution)

- Integration and implementation (presumably 6-18 months, $1M-$10M)

- Training and change management

- Reduced headcount (looks like savings)

Hidden Ongoing Costs (What You Don't Track Until It's Too Late)

- Operations cleanup (shipping errors, redeliveries, customer service escalations)

- Compliance risk (audit failures, documentation gaps, potential penalties)

- Customer churn (lost lifetime value, acquisition costs to replace)

- Data correction (human teams fixing AI mistakes)

- System maintenance (managing AI outputs, adjusting rules)

- Reputation damage (hard to quantify, impossible to ignore)

Most organizations track the first list. Almost none properly track the second.
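
The arithmetic is trivial; the discipline is putting both lists into the same calculation. Here is a minimal Python sketch using the only figures this article has for the opening example: the $6.8M in payroll savings and the $47M charge. That retailer's platform and integration costs aren't known, so they are left empty, which means the real result can only be worse than what this prints. `net_effect` is a hypothetical helper.

```python
# Minimal sketch: net effect = tracked savings minus tracked AND hidden costs.
# net_effect() is hypothetical; figures are in $M.
def net_effect(payroll_savings: float, upfront_costs: dict[str, float],
               hidden_costs: dict[str, float]) -> tuple[float, float]:
    """Return (net result, total cost as a multiple of the savings)."""
    total_cost = sum(upfront_costs.values()) + sum(hidden_costs.values())
    return payroll_savings - total_cost, total_cost / payroll_savings


# The retailer from the opening: upfront platform costs unknown, so omitted.
net, ratio = net_effect(
    payroll_savings=6.8,
    upfront_costs={},
    hidden_costs={"operational adjustments and data remediation": 47.0},
)
print(f"net: {net:+.1f}M, costs = {ratio:.1f}x savings")  # net: -40.2M, costs = 6.9x savings
```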

The Hype around AI Data Management Will Soon Face a Harsh Reversal

Here's what I sense we're going to see within the next 12-18 months:

Quiet press releases about "organizational restructuring" and "strategic workforce investments." What they really mean: "We're rehiring the humans we fired because AI destroyed our operations."

Companies won't admit the mistake publicly. You'll see it in the job postings: "Senior Data Steward" and "Data Quality Manager" roles suddenly appearing at companies that eliminated those positions 12–18 months earlier. You'll see it in the contractor invoices for "data remediation projects" and "emergency compliance reviews."

The signs are already here:

- Forrester's "Predictions 2026" report states that half of AI-attributed layoffs are expected to be reversed.

- 55% of employers regret laying off workers because of AI.

- An MIT study, reported by Fortune, found that around 95% of generative AI pilots are failing, with only about 5% of companies that went “all in” on AI seeing measurable profit.

Among the people actually responsible for AI investment decisions, 57% expect AI will increase their headcount over the next year while only 15% expect decreases.

"We didn’t miscalculate what AI could do. We underestimated what only humans can do."

- Former IBM HR Executive (2024)

"Businesses are learning the hard way that replacing people with AI without fully understanding the impact on their workforce can go badly wrong."

- Oliver Shaw, CEO, Orgvue

What to Do If You're Already in Too Deep

If you're already committed to AI data management tools:

Audit what your AI has changed

Pull logs for the last 30-90 days. What fields has it modified? What records has it merged? Do spot checks on 50-100 records to verify the changes make sense. Look specifically for plausible errors: changes that look reasonable but are contextually wrong.

Establish verification protocols

For high-impact changes (customer contact info, financial data, compliance-critical fields), mandate human review before AI commits changes. Yes, this reduces automation efficiency. It also prevents million-dollar mistakes.

Document your data standards

If you can't write down what "correct" looks like, your AI definitely can't figure it out. Define clear, unambiguous standards for every field the AI touches. Include the why, not just the what. Remember, when working with AI, context matters the most.
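
The three steps above fit together: documented standards define what the AI may touch, a review rule holds high-impact changes, and a sampler feeds the spot checks. Here is a minimal Python sketch, assuming your platform exposes a change log recording who changed what, from what, and when. The `ChangeRecord` shape, the field names, and the `ai_platform` actor label are hypothetical; map them onto whatever your tooling actually records.

```python
# Minimal sketch: documented standards, a review rule, and a spot-check sampler.
# ChangeRecord, the field names, and the "ai_platform" actor are hypothetical.
import random
from dataclasses import dataclass
from datetime import datetime, timedelta

# Documented standards: what "correct" means for each field, and why.
FIELD_STANDARDS = {
    "shipping_address": "Keep unit designators (Apt/Unit/Ste): carriers need them in multi-unit buildings.",
    "income_bracket": "Customer-declared values only; inferred values belong in a separate field.",
    "invoice_status": "Only the payments system may mark an invoice paid or unpaid.",
}

# Fields where an AI-proposed change waits for a person before it is committed.
HIGH_IMPACT = {"shipping_address", "income_bracket", "invoice_status"}


@dataclass
class ChangeRecord:
    change_id: str
    field_name: str
    old: str
    new: str
    actor: str  # "ai_platform" or a person's user ID
    at: datetime


def needs_human_review(change: ChangeRecord) -> bool:
    """AI changes to high-impact fields, or to fields with no documented standard, wait for review."""
    if change.actor != "ai_platform":
        return False
    return change.field_name in HIGH_IMPACT or change.field_name not in FIELD_STANDARDS


def spot_check_sample(log: list[ChangeRecord], now: datetime, days: int = 90,
                      size: int = 100, seed: int = 0) -> list[ChangeRecord]:
    """Reproducible random sample of AI changes made in the last `days` days."""
    recent = [c for c in log
              if c.actor == "ai_platform" and now - c.at <= timedelta(days=days)]
    return random.Random(seed).sample(recent, min(size, len(recent)))


now = datetime(2025, 10, 1)
log = [
    ChangeRecord("c1", "shipping_address", "123 Main St, Apt 4B", "123 Main Street",
                 "ai_platform", now - timedelta(days=5)),
    ChangeRecord("c2", "email", "a@example.com", "a@example.org",
                 "ai_platform", now - timedelta(days=200)),
    ChangeRecord("c3", "income_bracket", "$75K", "$125K", "jdoe", now - timedelta(days=1)),
]
sample = spot_check_sample(log, now)  # only c1: c2 is too old, c3 was a human edit
```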

If you're considering bringing in AI data quality management tools:

Fix your processes first

Document ownership, standardize workflows, unify definitions. Break down the silos. If you can't do this, AI will just automate the systemic dysfunctions faster and at a greater scale.

Start with maintenance, not transformation

Use AI to flag anomalies and maintain standards you've already established. Don't use it to "fix" problems you haven't properly diagnosed. AI excels at pattern recognition within well-defined parameters, not at understanding organizational complexity.

Keep the humans who understand context

Your senior data stewards, your business analysts who know why the data looks the way it does—these people are your defense against plausible errors. Letting them go to fund AI is penny-wise and pound-foolish.
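
"Flag, don't fix" can be as simple as checks that return findings instead of edits. Here is a minimal Python sketch, assuming the standards are already agreed. The two rules and the outlier threshold are placeholders, and the outlier test measures distance from the median in units of the median absolute deviation, so a single extreme value can't drag the baseline along with it.

```python
# Minimal sketch of "maintenance mode": checks report problems against
# standards you already set and never modify the data. Rules and the
# threshold below are placeholder assumptions.
from statistics import median


def robust_outliers(values: list[float], threshold: float = 5.0) -> list[int]:
    """Indexes of values whose distance from the median exceeds `threshold` MADs."""
    med = median(values)
    mad = median([abs(v - med) for v in values])  # median absolute deviation
    if mad == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - med) / mad > threshold]


RULES = {
    "zip": lambda v: isinstance(v, str) and len(v) in (5, 10),  # 12345 or 12345-6789
    "email": lambda v: isinstance(v, str) and "@" in v,
}


def flag_record(record: dict) -> list[str]:
    """Return human-readable flags; the record itself is left untouched."""
    return [f"{name}: fails standard" for name, ok in RULES.items()
            if name in record and not ok(record[name])]


amounts = [100, 105, 98, 102, 30000]
print(robust_outliers(amounts))  # [4]
record = {"zip": "1234", "email": "a@b.com"}
print(flag_record(record))  # ['zip: fails standard']
```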

Blending AI with Human Experience Is Your Best Shot

Institutional knowledge can't be replaced with pattern recognition.

Before you move ahead with AI data management solutions, ask yourself three questions:

- Can you trace every change the AI makes?

- Can you reverse it if it's wrong?

- Do you know when it's wrong?

If you can't confidently answer yes to all three, you're not ready for AI data management. You have likely not considered the real cost of automation without accountability. You are very likely in for a million-dollar lesson in why organizational problems can't be solved with algorithmic band-aids.
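
The first two questions are answerable only if every change is logged with its before and after values. Here is a minimal Python sketch of such a log, assuming a simple in-memory store; `ChangeLog` and its methods are hypothetical. Reverts are themselves logged, and the revert refuses to undo a change that has since been overwritten. The third question, knowing when it's wrong, still depends on the people and standards discussed above.

```python
# Minimal sketch of a traceable, reversible change log (in-memory, single process).
# ChangeLog and its methods are hypothetical, not a specific product's API.
from dataclasses import dataclass, field
from itertools import count

_MISSING = object()  # marks "field did not exist before this change"


@dataclass
class ChangeLog:
    records: dict = field(default_factory=dict)  # record_id -> {field_name: value}
    entries: dict = field(default_factory=dict)  # change_id -> (record_id, field, old, new, actor)
    _ids: count = field(default_factory=count)

    def apply(self, record_id: str, field_name: str, new: object, actor: str) -> int:
        """Write a value, log who changed it from what to what, and return the change ID."""
        record = self.records.setdefault(record_id, {})
        change_id = next(self._ids)
        self.entries[change_id] = (record_id, field_name,
                                   record.get(field_name, _MISSING), new, actor)
        record[field_name] = new
        return change_id

    def revert(self, change_id: int, actor: str) -> int:
        """Undo one change (logging the revert), refusing if the field changed again since."""
        record_id, field_name, old, new, _ = self.entries[change_id]
        record = self.records[record_id]
        if record.get(field_name, _MISSING) != new:
            raise ValueError(f"change {change_id} was overwritten later; resolve manually")
        revert_id = next(self._ids)
        self.entries[revert_id] = (record_id, field_name, new, old,
                                   f"{actor} (revert of {change_id})")
        if old is _MISSING:
            del record[field_name]
        else:
            record[field_name] = old
        return revert_id


log = ChangeLog()
log.apply("cust-42", "address", "123 Main St, Apt 4B", actor="crm_import")
cid = log.apply("cust-42", "address", "123 Main Street", actor="ai_platform")
log.revert(cid, actor="data_steward")
print(log.records["cust-42"]["address"])  # 123 Main St, Apt 4B
```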

I've watched companies make these mistakes. The $47M cleanup costs. The duplicate payments that took months to recover. The "operational adjustments" that obliterated quarterly earnings. Despite these consequences, the industry keeps selling speed and efficiency, packaged as AI data management, at the expense of accuracy.

Amidst these circumstances, the survivors won't be the companies with the best AI data management tools. They'll be the ones who never fell for the "AI can do it all" narrative in the first place. The ones who understood that AI is a powerful tool for maintaining standards that humans establish, not a replacement for the humans who understand why those standards exist.

Pavan Kakar

Pavan Kakar, Associate Vice President of International Sales at SunTec India, is an experienced professional in the data services domain with over two decades of global experience. He drives enterprise growth across 50+ countries and is a recognized opinion leader for data-driven innovation, human-in-the-loop AI, and business intelligence.