Overcoming Image Labeling Complexity with Intensive Training and Custom Automation

A team of 20 image labeling professionals (13 annotators, 4 QA specialists, 2 SMEs, and a project coordinator) was assigned to this client. Because the task was technically complex and the edge cases were initially undefined, we brought in domain experts (professional annotators with experience in geospatial or scientific image analysis) who worked with the client to establish clear criteria for ambiguous scenarios and to address new edge cases as they emerged.

Two Weeks of Intensive Training to Ensure High Labeling Accuracy

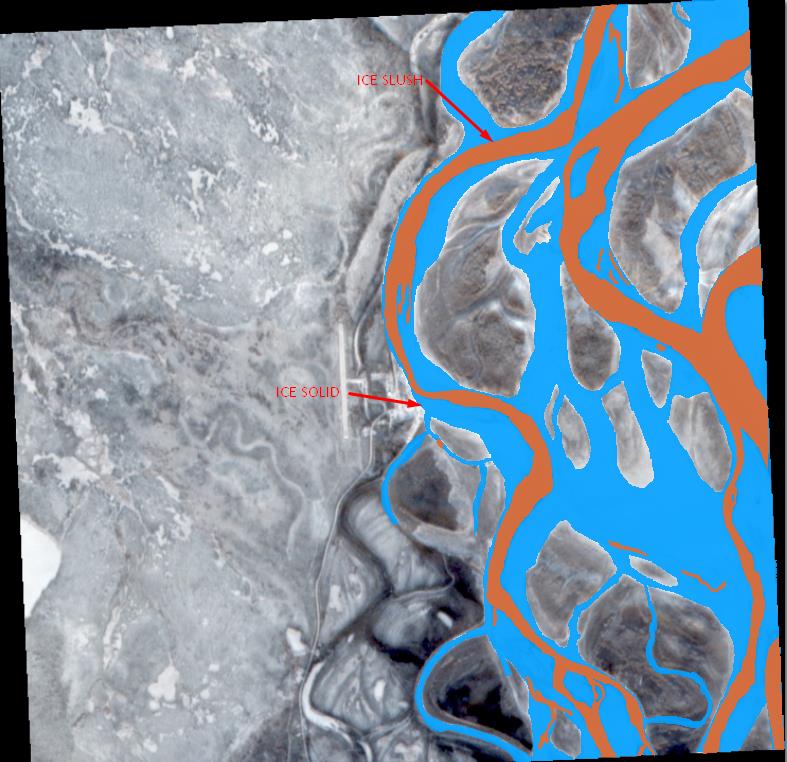

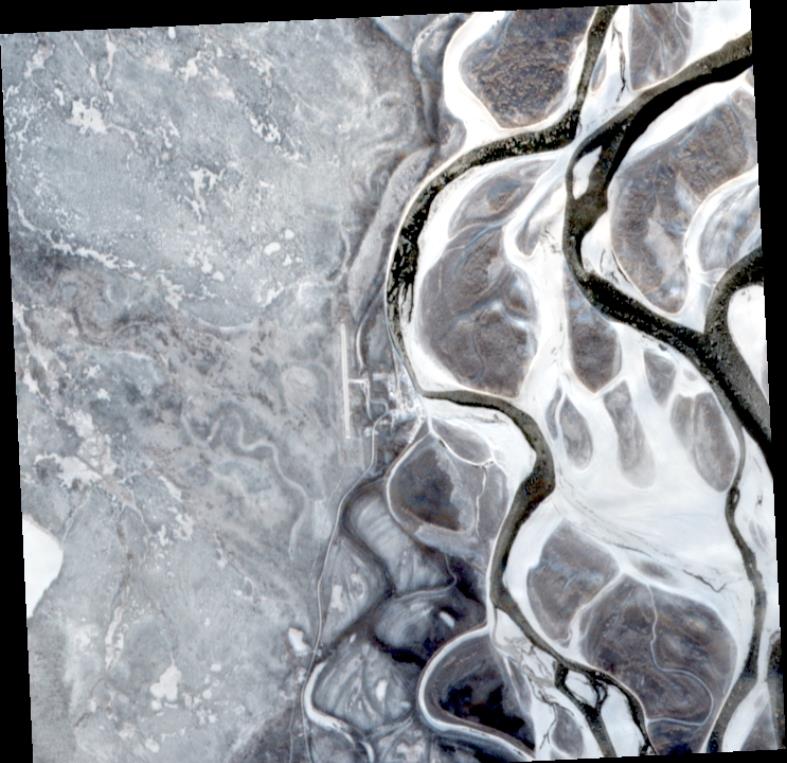

The first week of training covered satellite imagery fundamentals, ice formation science, and RGB interpretation. Under the guidance of subject matter experts, the data labeling team learned to identify the visual indicators that differentiate ice-solid from ice-slush, such as texture patterns, tonal gradients, boundary sharpness, and reflectivity differences. They were also introduced to edge-case scenarios through reference examples categorized by difficulty level.

In week two, our team worked on 300 practice images selected by SMEs to represent the full spectrum of complexity. Each annotator's work was compared against SME "ground truth" annotations to identify and correct interpretation gaps. The decision criteria for each labeling decision and edge case were documented, and a comprehensive reference was assembled with visual examples, decision trees, and troubleshooting guides.
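
One common way to compare an annotator's mask against an SME reference is per-class intersection-over-union (IoU). The sketch below is illustrative only: the source does not state which metric was used, and the class encoding, the `per_class_iou` name, and the tiny example masks are assumptions made for this example.

```python
from typing import Dict, Sequence

# Class names follow the project; the string encoding is an assumption.
CLASSES = ("water", "ice_solid", "ice_slush")

Mask = Sequence[Sequence[str]]  # one class label per pixel


def per_class_iou(annotator: Mask, reference: Mask) -> Dict[str, float]:
    """Return intersection-over-union per class for two same-sized masks."""
    if len(annotator) != len(reference) or any(
        len(a) != len(r) for a, r in zip(annotator, reference)
    ):
        raise ValueError("masks must have the same shape")

    inter = {c: 0 for c in CLASSES}
    union = {c: 0 for c in CLASSES}
    for row_a, row_r in zip(annotator, reference):
        for a, r in zip(row_a, row_r):
            if a == r:
                # Pixel counts toward both intersection and union of its class.
                inter[a] += 1
                union[a] += 1
            else:
                # Disagreement: the pixel enlarges the union of both classes.
                union[a] += 1
                union[r] += 1
    # A class absent from both masks is treated as perfect agreement.
    return {c: (inter[c] / union[c] if union[c] else 1.0) for c in CLASSES}


# Hypothetical 2x3 masks: the annotator labels one slush pixel as solid ice.
sme_mask = [["water", "water", "ice_solid"],
            ["water", "ice_slush", "ice_solid"]]
annotator_mask = [["water", "water", "ice_solid"],
                  ["water", "ice_solid", "ice_solid"]]

scores = per_class_iou(annotator_mask, sme_mask)
for name, score in scores.items():
    print(f"{name}: {score:.2f}")
```

A low score on a single class (here, ice-slush) points to a specific interpretation gap rather than general carelessness, which is the kind of signal a targeted retraining session can act on.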

Image Annotation Platform Setup – CVAT

We used CVAT for image annotation. In our experience across image and video annotation projects, it has proven highly effective for labeling tasks that require pixel-level precision (in this case, along irregular shorelines and ice boundaries). Our team customized CVAT to match the client’s requirements.

- Custom Keyboard Shortcuts: Configured single-key class switching (W for Water, S for Ice-Solid, L for Ice-Slush), reducing annotation time by approximately 15-20% by eliminating repeated menu navigation.

- Color-Coded Class Visualization: Assigned contrasting colors to each class (blue for Water, green for Ice-Solid, white for Ice-Slush) to make misclassifications immediately visible during annotation.

- Zoom Presets: Created standardized zoom levels (100%, 200%, 400%) for consistent edge boundary work across all annotators.

- Annotation Templates: Pre-loaded common edge cases as reference overlays that annotators could toggle on/off for guidance.

- Batch Loading Optimization: Configured image pre-loading to minimize wait times between annotations, keeping annotators in a focused workflow.

Sequential Dataset Segmentation

The dataset provided by the client was already divided into seasonal groups. We assigned a dedicated sub-team to each season’s dataset, allowing the team to develop familiarity with specific patterns within a particular group. We also rotated reviewers between seasonal teams so the annotation logic applied to one seasonal dataset aligned with the others, maintaining semantic consistency across all the images.

Annotation Drift Detection

Over time, repeated annotation tasks can lead to slight variations in how annotators interpret similar images, resulting in labeling drift. To prevent this, each batch of labeled data was systematically reviewed and compared against previous outputs.

When inconsistencies were identified (for example, differing treatment of melting ice edges between spring and early summer), subject matter experts refined and updated the annotation guidelines to maintain conceptual consistency.

To speed up this process, we later built an automated annotation drift detection script. It analyzed clusters of related images (for example, 10 winter images of the same river bend) to identify subtle shifts in labeling behavior over time or between annotators. Any detected anomalies were flagged for expert review, ensuring uniformity and reliability in the final dataset.
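
The source does not describe how the drift detection script worked internally. As a rough sketch of one possible approach, the example below compares the share of pixels assigned to each class across a cluster of related images and flags annotations that deviate strongly from the cluster median. The `Annotation` structure, the `flag_drift` function, the 0.15 threshold, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Tuple


@dataclass
class Annotation:
    image_id: str
    annotator: str
    # Share of the image's pixels assigned to each class (sums to about 1).
    class_fractions: Dict[str, float]


def flag_drift(
    cluster: List[Annotation], threshold: float = 0.15
) -> List[Tuple[str, str, str, float]]:
    """Flag annotations whose class share differs from the cluster median
    by more than `threshold` (an assumed, tunable value)."""
    flags = []
    classes = sorted({c for a in cluster for c in a.class_fractions})
    for cls in classes:
        cluster_median = median(a.class_fractions.get(cls, 0.0) for a in cluster)
        for a in cluster:
            deviation = a.class_fractions.get(cls, 0.0) - cluster_median
            if abs(deviation) > threshold:
                flags.append((a.image_id, a.annotator, cls, round(deviation, 3)))
    return flags


# Hypothetical cluster: five winter images of the same river bend.
cluster = [
    Annotation("img1", "A", {"water": 0.50, "ice_solid": 0.40, "ice_slush": 0.10}),
    Annotation("img2", "A", {"water": 0.50, "ice_solid": 0.38, "ice_slush": 0.12}),
    Annotation("img3", "C", {"water": 0.50, "ice_solid": 0.39, "ice_slush": 0.11}),
    Annotation("img4", "C", {"water": 0.50, "ice_solid": 0.41, "ice_slush": 0.09}),
    Annotation("img5", "B", {"water": 0.40, "ice_solid": 0.25, "ice_slush": 0.35}),
]

for image_id, annotator, cls, deviation in flag_drift(cluster):
    print(f"review {image_id} ({annotator}): {cls} share off by {deviation:+.2f}")
```

The median is used here instead of the mean so that a single drifting annotation does not pull the baseline toward itself. Flagged items still go to an expert, since a real change in ice cover can look the same as a labeling shift.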

Semi-Automated Edge Detection

Accurately tracing complex class boundaries quickly became the most time-consuming aspect of this project, so we implemented a computer vision-based edge detection solution. The algorithm analyzed RGB gradients to identify sharp transitions (sudden changes in color or brightness between neighboring pixels) between water, ice-solid, and ice-slush regions.
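
To illustrate the idea of gradient-based boundary detection, the sketch below marks a pixel as a candidate boundary when its color differs sharply from its right or lower neighbor. It is a simplified, pure-Python stand-in rather than the project's actual algorithm: the `suggest_boundaries` function, the threshold of 90, and the sample pixel values are assumptions, and a production version would more likely rely on an image-processing library.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # rows of pixels; assumed non-empty


def color_distance(p: Pixel, q: Pixel) -> int:
    """Sum of absolute per-channel differences between two pixels."""
    return sum(abs(a - b) for a, b in zip(p, q))


def suggest_boundaries(image: Image, threshold: int = 90) -> List[List[bool]]:
    """Mark pixels whose right or lower neighbor differs by more than
    `threshold` (an assumed value that would need tuning per dataset)."""
    height, width = len(image), len(image[0])
    edges = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            right = color_distance(image[y][x], image[y][x + 1]) if x + 1 < width else 0
            down = color_distance(image[y][x], image[y + 1][x]) if y + 1 < height else 0
            edges[y][x] = max(right, down) > threshold
    return edges


# Hypothetical 2x4 patch: dark water on the left, bright ice on the right.
WATER: Pixel = (20, 40, 90)
ICE: Pixel = (230, 235, 240)
patch = [[WATER, WATER, ICE, ICE],
         [WATER, WATER, ICE, ICE]]

for row in suggest_boundaries(patch):
    print("".join("#" if edge else "." for edge in row))
```

In this patch the only flagged pixels are the water pixels directly next to the ice, which is where a suggested outline would be drawn. Low-contrast transitions, such as ice-solid fading into ice-slush, may fall below any fixed threshold, which is one reason the suggestions remain subject to annotator review.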

These computer-generated boundary suggestions were displayed to annotators in CVAT, allowing them to accept, refine, or override the automated outlines, reducing manual tracing time by approximately 30% while maintaining human oversight for accuracy.

Raw image

Annotated image