A CV-Powered Security Analytics Platform for Real-Time, Cross-Site Monitoring

We designed a multi-tenant computer vision (CV) security platform to automate real-time monitoring, filter out false positives, and standardize analytics across heterogeneous sites. The platform runs inference at the edge, processing video locally to minimize latency while staying within limited bandwidth and storage.

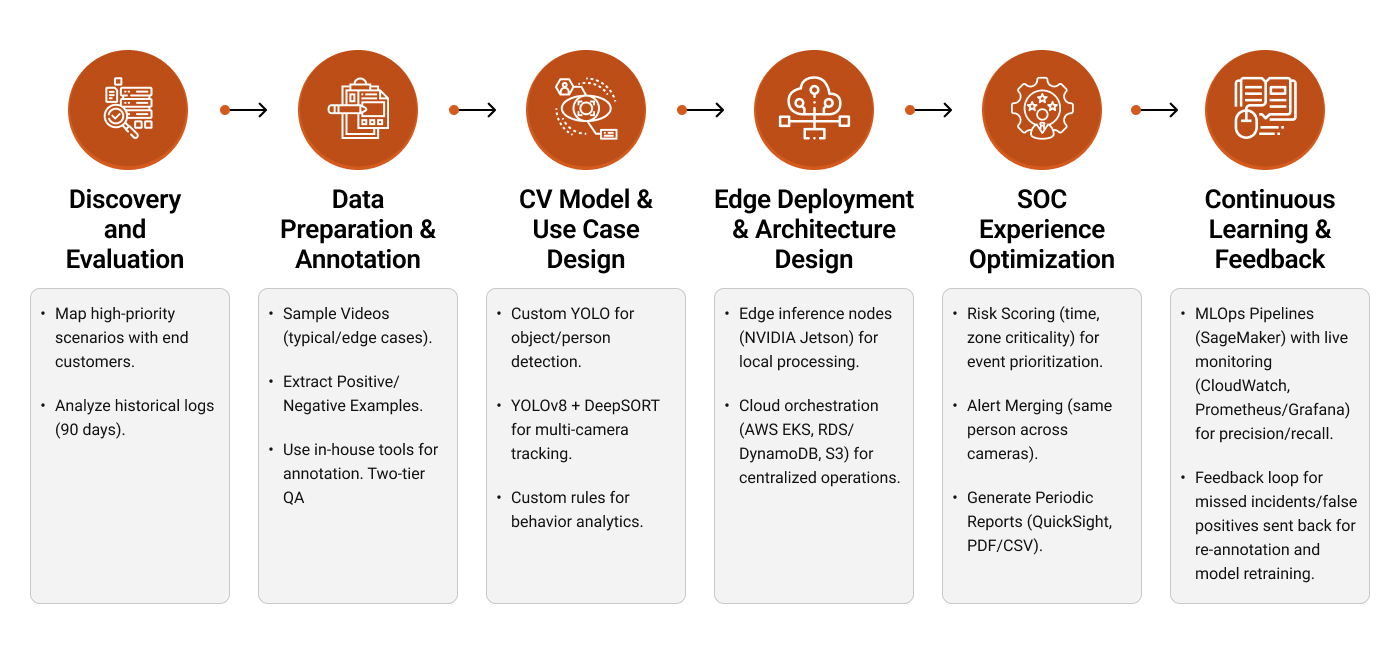

Workflow of the solution

Discovery and Evaluation

We started with a 4-week discovery phase:

- Worked closely with operations leads and three representative end customers to map high-priority security scenarios and current incident patterns.

- Analyzed 90 days of historical incident logs to understand where manual monitoring failed.

- Selected a representative subset of 400 cameras across 12 sites, covering indoor corridors, loading bays, parking lots, lobbies, and high-value zones, to serve as the initial training and validation cohort.

Data Preparation and Annotation

The client also had petabytes of archived footage, but it was not accurately annotated for model training. We consolidated this footage and set up a data pipeline for the following:

- Sampling videos for typical activity and edge cases (night shifts, rain, varying lighting).

- Extracting both positive and negative examples so the models could learn subtle distinctions, such as a visitor escorted by staff versus a genuine tailgating event.

- Annotating the curated dataset to label:

  - Persons, vehicles, and objects

  - Specific PPE items (helmets, high-visibility vests)

  - Zones of interest (restricted areas, exits, fence lines, parking slots)

  - Interaction labels such as “person entering secure zone,” “person without helmet in PPE zone,” “object left behind,” and “multiple persons through single access event”

All annotations went through a two-tier QA process, with a target agreement rate above 98 percent on key classes.
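The source does not say how agreement was measured. As one possible illustration, the sketch below compares two annotators' bounding boxes per class: same-class boxes are paired greedily when their IoU is at least 0.5, and agreement is the Dice-style ratio 2 × matched / (boxes from A + boxes from B). The box format, the IoU threshold, and the names `per_class_agreement` and `classes_below_target` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical box format: (class_label, x1, y1, x2, y2) in pixel coordinates.
Box = tuple[str, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[1], b[1]), max(a[2], b[2])
    ix2, iy2 = min(a[3], b[3]), min(a[4], b[4])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[3] - a[1]) * (a[4] - a[2])
    area_b = (b[3] - b[1]) * (b[4] - b[2])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def per_class_agreement(frames, iou_threshold: float = 0.5) -> dict[str, float]:
    """frames: iterable of (boxes_from_annotator_a, boxes_from_annotator_b), one pair per image."""
    count_a, count_b, matched = defaultdict(int), defaultdict(int), defaultdict(int)
    for boxes_a, boxes_b in frames:
        for box in boxes_a:
            count_a[box[0]] += 1
        for box in boxes_b:
            count_b[box[0]] += 1
        used: set[int] = set()
        for a in boxes_a:
            # Greedy matching: pair each A box with the best unused same-class B box.
            best_j, best_iou = None, iou_threshold
            for j, b in enumerate(boxes_b):
                if j in used or b[0] != a[0]:
                    continue
                score = iou(a, b)
                if score >= best_iou:
                    best_j, best_iou = j, score
            if best_j is not None:
                used.add(best_j)
                matched[a[0]] += 1
    labels = set(count_a) | set(count_b)
    # Dice-style agreement: 2 * matched pairs / (boxes from A + boxes from B).
    return {c: 2 * matched[c] / (count_a[c] + count_b[c]) for c in labels}


def classes_below_target(agreement: dict[str, float], target: float = 0.98) -> list[str]:
    """Classes that would be sent back for another QA pass."""
    return sorted(c for c, score in agreement.items() if score < target)
```

For example, a class that falls below 0.98 would be re-reviewed in the second QA tier before the batch is accepted.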

CV Model and Use Case Design

Using the annotated datasets, we implemented a modular CV model stack with custom-trained models tailored to specific use cases.

- Object and Person Detection: A customized object detection model (based on a modern YOLO variant).

- Multi-Camera Tracking: A tracking layer that combines a YOLOv8 detector with a DeepSORT-style multi-object tracker to follow individuals across multiple cameras within a site.

- Zone and Behavior Analytics: Custom rules and micro-models on top of the YOLOv8 + DeepSORT stack to interpret behavior:

  - Perimeter breaches when a person crosses a virtual fence

  - Tailgating when the number of people entering exceeds the authorized number

  - Loitering based on dwell-time thresholds in sensitive zones

  - Abandoned objects when an object remains in place after its associated person has left the frame

- Anomaly Detection for Unknown Patterns: For areas with complex traffic patterns, we added an unsupervised anomaly detection component that learned “normal” motion flows over time and flagged deviations.
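The rule layer works on tracker output: per-frame positions keyed by track ID. The sketch below shows one plausible shape for three of the rules, assuming image-plane coordinates and hypothetical names (`crossed_fence`, `in_zone`, `LoiterDetector`, `is_tailgating`). A perimeter breach is a segment-intersection test between a track's last step and the virtual fence, loitering is dwell time inside a polygon, and tailgating compares distinct track IDs against the authorized count for one access event.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]


def _orient(p: Point, q: Point, r: Point) -> float:
    """Cross product (q - p) x (r - p): positive if r is left of p->q, negative if right."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def crossed_fence(prev: Point, curr: Point, fence_a: Point, fence_b: Point) -> bool:
    """True if the track step prev->curr strictly crosses the fence segment a-b."""
    d1 = _orient(fence_a, fence_b, prev)
    d2 = _orient(fence_a, fence_b, curr)
    d3 = _orient(prev, curr, fence_a)
    d4 = _orient(prev, curr, fence_b)
    return d1 * d2 < 0 and d3 * d4 < 0


def in_zone(pt: Point, polygon: list[Point]) -> bool:
    """Ray-casting point-in-polygon test."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray, so y1 != y2
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


@dataclass
class LoiterDetector:
    """Flags a track once it has stayed inside the zone for dwell_threshold_s seconds."""
    zone: list[Point]
    dwell_threshold_s: float
    _entered_at: dict[int, float] = field(default_factory=dict, init=False, repr=False)

    def update(self, track_id: int, pt: Point, t: float) -> bool:
        if not in_zone(pt, self.zone):
            self._entered_at.pop(track_id, None)  # leaving the zone resets the timer
            return False
        start = self._entered_at.setdefault(track_id, t)
        return t - start >= self.dwell_threshold_s


def is_tailgating(track_ids_through_door: list[int], authorized_count: int) -> bool:
    """More distinct people passed through than the access event authorized."""
    return len(set(track_ids_through_door)) > authorized_count
```

Keeping these as plain geometric rules over tracker output, rather than extra learned models, makes thresholds such as dwell time easy to tune per zone.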

Edge Deployment and System Architecture Design

Given the client’s scale and latency requirements, we designed a hybrid edge-cloud architecture:

- Edge Inference Nodes: We deployed containerized inference services on NVIDIA Jetson-class devices installed in each site’s network to ingest streams from local cameras. Running inference on the Jetson devices ensured that only low-bitrate structured events, rather than continuous video streams, were passed on to the cloud.

- Cloud Orchestration: We hosted the central security analytics platform on AWS, with:

  - Containerized services on Amazon EKS

  - Alert and configuration data stored in Amazon RDS/DynamoDB

  - Event media archived in Amazon S3

  All of these were exposed through secure APIs and web dashboards.

- Resilience and Bandwidth Control: If cloud connectivity dropped, edge nodes continued to run detection and buffered critical events locally. We also configured adaptive streaming and event batching to keep bandwidth consumption within set limits.
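The buffering and batching behavior can be sketched as a store-and-forward queue on each edge node. This is a minimal sketch, not the deployed service: `EventBuffer` and the `send_batch` callback (assumed to upload one batch and return `True` on success) are hypothetical, and dropping the oldest events once the buffer is full is an assumed policy.

```python
from collections import deque
from itertools import islice
from typing import Callable


class EventBuffer:
    """Hypothetical store-and-forward queue for structured events on an edge node."""

    def __init__(self, send_batch: Callable[[list[dict]], bool],
                 batch_size: int = 50, max_buffered: int = 10_000) -> None:
        # send_batch uploads one batch and returns True on success (assumed contract).
        self._send_batch = send_batch
        self._batch_size = batch_size
        # Bounded queue: once full, the oldest events are discarded (assumed policy).
        self._queue: deque[dict] = deque(maxlen=max_buffered)

    def __len__(self) -> int:
        return len(self._queue)

    def add(self, event: dict) -> None:
        self._queue.append(event)

    def flush(self) -> int:
        """Send buffered events in batches; stop at the first failure and keep the rest."""
        sent = 0
        while self._queue:
            batch = list(islice(self._queue, self._batch_size))
            if not self._send_batch(batch):
                break  # cloud unreachable: events stay queued for the next flush
            for _ in batch:
                self._queue.popleft()
            sent += len(batch)
        return sent
```

Batching amortizes per-request overhead, and removing events only after a successful upload means a dropped connection delays delivery instead of losing the batch.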

Workflows and SOC (Security Operations Center) Experience Optimization

The goal was not only better detection but also better decision-making by human operators. We made the following changes to support this:

- Each event (for example, “PPE violation in Bay 4”) was assigned a risk score based on time, zone criticality, and event type so operators could prioritize accordingly.

- We implemented custom logic to merge related alerts, such as multiple detections of the same person loitering across adjacent cameras.

- We configured the platform to generate periodic reports for end customers using Amazon QuickSight and scheduled exports (PDF/CSV) from Amazon S3/RDS.
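The risk score and alert merging can be illustrated with a small sketch. The inputs (event type, zone criticality, time) come from the description above, but the weights, the after-hours multiplier, the 60-second merge window, and all names (`EVENT_TYPE_WEIGHT`, `Alert`, `risk_score`, `merge_alerts`) are hypothetical. The merge step also assumes that the multi-camera tracker assigns one site-level track ID per person.

```python
from dataclasses import dataclass

# Hypothetical per-type weights in [0, 1]; the production weighting is not documented.
EVENT_TYPE_WEIGHT = {
    "perimeter_breach": 1.0,
    "tailgating": 0.8,
    "abandoned_object": 0.7,
    "loitering": 0.5,
    "ppe_violation": 0.4,
}


@dataclass
class Alert:
    event_type: str
    camera_id: str
    track_id: int             # site-level ID from the multi-camera tracker (assumed)
    time_s: float             # event timestamp in seconds
    zone_criticality: float   # 0.0 (low) to 1.0 (high), set per zone in configuration
    hour: int                 # local hour of day, 0-23


def risk_score(alert: Alert) -> float:
    """Score from 0 to 100 combining event type, zone criticality, and time of day."""
    after_hours = alert.hour < 6 or alert.hour >= 22
    time_factor = 1.5 if after_hours else 1.0  # assumed after-hours boost
    weight = EVENT_TYPE_WEIGHT.get(alert.event_type, 0.3)
    return round(100 * min(1.0, weight * alert.zone_criticality * time_factor), 1)


def merge_alerts(alerts: list[Alert], adjacency: dict[str, set[str]],
                 window_s: float = 60.0) -> list[list[Alert]]:
    """Group alerts for the same track and event type on the same or adjacent cameras."""
    incidents: list[list[Alert]] = []
    for alert in sorted(alerts, key=lambda a: a.time_s):
        for incident in incidents:
            last = incident[-1]
            same_subject = (last.event_type == alert.event_type
                            and last.track_id == alert.track_id)
            nearby = (alert.camera_id == last.camera_id
                      or alert.camera_id in adjacency.get(last.camera_id, ()))
            if same_subject and nearby and alert.time_s - last.time_s <= window_s:
                incident.append(alert)
                break
        else:
            incidents.append([alert])  # no open incident matched: start a new one
    return incidents
```

With this shape, an operator queue would show one incident per group, ranked by the highest `risk_score` among its alerts.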

Continuous Learning and Governance

To ensure reliability at scale, we implemented SageMaker-based MLOps pipelines with live performance monitoring through CloudWatch and Prometheus/Grafana. Missed incidents and false positives were fed back for re-annotation and periodic retraining, with updated models rolled out gradually. We also built industry-specific configuration templates so new sites could be onboarded quickly with baseline analytics tailored to their environment.
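The source does not describe how the gradual rollout was implemented. One common approach, shown here as an assumption rather than the team’s method, is deterministic hash bucketing: each site maps to a stable value in [0, 1) and receives the candidate model when that value falls below the current rollout fraction. A simple gate based on operator-reviewed precision and recall then decides whether to widen the fraction. The names `rollout_bucket`, `model_for_site`, and `ready_to_expand`, as well as the tolerance, are hypothetical.

```python
import hashlib


def rollout_bucket(site_id: str, model_version: str) -> float:
    """Map a (model version, site) pair to a stable value in [0, 1)."""
    digest = hashlib.sha256(f"{model_version}:{site_id}".encode()).digest()
    # Keep 53 bits so the float division is exact and always below 1.0.
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def model_for_site(site_id: str, stable_version: str,
                   candidate_version: str, rollout_fraction: float) -> str:
    """Serve the candidate to roughly rollout_fraction of sites, deterministically."""
    if rollout_bucket(site_id, candidate_version) < rollout_fraction:
        return candidate_version
    return stable_version


def ready_to_expand(candidate: dict[str, float], stable: dict[str, float],
                    tolerance: float = 0.01) -> bool:
    """Widen the rollout only if precision and recall did not regress beyond tolerance."""
    return all(candidate[m] >= stable[m] - tolerance for m in ("precision", "recall"))
```

Because the bucket depends only on the site and the model version, raising the fraction from 0.2 to 0.5 keeps every early site on the candidate, and each new model version starts with a different set of pilot sites.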